How many Radeon RX 6700XT graphics cards did AMD produce? Well, at least one. Sam Machkovech

One HDMI 2.1 connection, and three DisplayPort connections. Notice that this lacks the USB Type-C connection found in its "Big Navi" siblings.

Power: one eight-pin, one six-pin.

Unscrew if you think you have a better idea for a backplate, I suppose.

Unboxing.

Ready for unpacking and installation.

Look, I'll level with you: reviewing a GPU amidst a global chip shortage is ludicrous enough to count as dark comedy. Your ability to buy new, higher-end GPUs from either Nvidia or AMD has been hamstrung for months—a fact borne out by their very low ranks on Steam's gaming PC stats gathered around the world.

As of press time, AMD's latest "Big Navi" GPUs barely make a ripple in Steam's list. That's arguably a matter of timing, with their November 2020 launch coming two months after Nvidia began shipping its own 3000-series GPUs. But how much is that compounded by low supplies and shopping bots? AMD isn't saying, and on the eve of the Radeon RX 6700XT's launch, the first in its "Little Navi" line, the company's assurances aren't entirely comforting.

In an online press conference ahead of the launch, AMD Product Manager Greg Boots offered the usual platitudes: "a ton of demand out there," "we're doing everything we can," that sort of thing. He mentioned a couple of AMD's steps that may help this time around. For one, AMD's "reference" GPU model is launching simultaneously with partner cards, so if the inventory is actually out there (we certainly don't know), that at least puts higher numbers of GPUs in the day-one pool. Also, Boots emphasized stock being made available specifically for brick-and-mortar retailers—though he didn't offer a ratio of how many GPUs are going to those shops, compared to online retailers.

But then he offered an assurance that sent my eyebrow to the ceiling: "We're working with retailers to prevent bots, like we did with the 6800."

I'm sorry, what?!

Moments later, Boots seemed to admit defeat: "At the end of the day, [retail partners] are either going to make use of that or not. That's up to them."

None of this makes AMD particularly special as a GPU manufacturer, mind you. Nvidia hasn't been a glowing steward of bot prevention or GPU supply control, either, and rapid-fire sellouts of its most recent GPU launch, February's RTX 3060, proved that out. In either manufacturer's case, we're going to have to play this broken record of "can you actually buy it?!" madness before every review until things change.

In particular, the typical "bang for the buck" conversation is currently moot. MSRP, or "manufacturer's suggested retail price," doesn't mean diddly in an eBay wasteland; your least favorite five-star retailer couldn't give two hoots about what AMD or Nvidia suggest, so long as desperate gamers and ethereum miners put the supply-and-demand curve in scalpers' favor.

Make sure to leave milk and CUDA cores

Despite these market realities, MSRP at least gives us a sense of each GPU's intended range, and the $449 RX 6700XT lands closest to Nvidia's RTX 3060 Ti, "priced" at $399. If you'd like to read this review in a classic Sears catalog way, dreaming that St. GPU Nicholas might one day fill your stocking with teraflops, this head-to-head showdown makes the most sense as a story of Team Red vs. Team Green—both focusing on high-end 1440p performance with these products, not 4K.

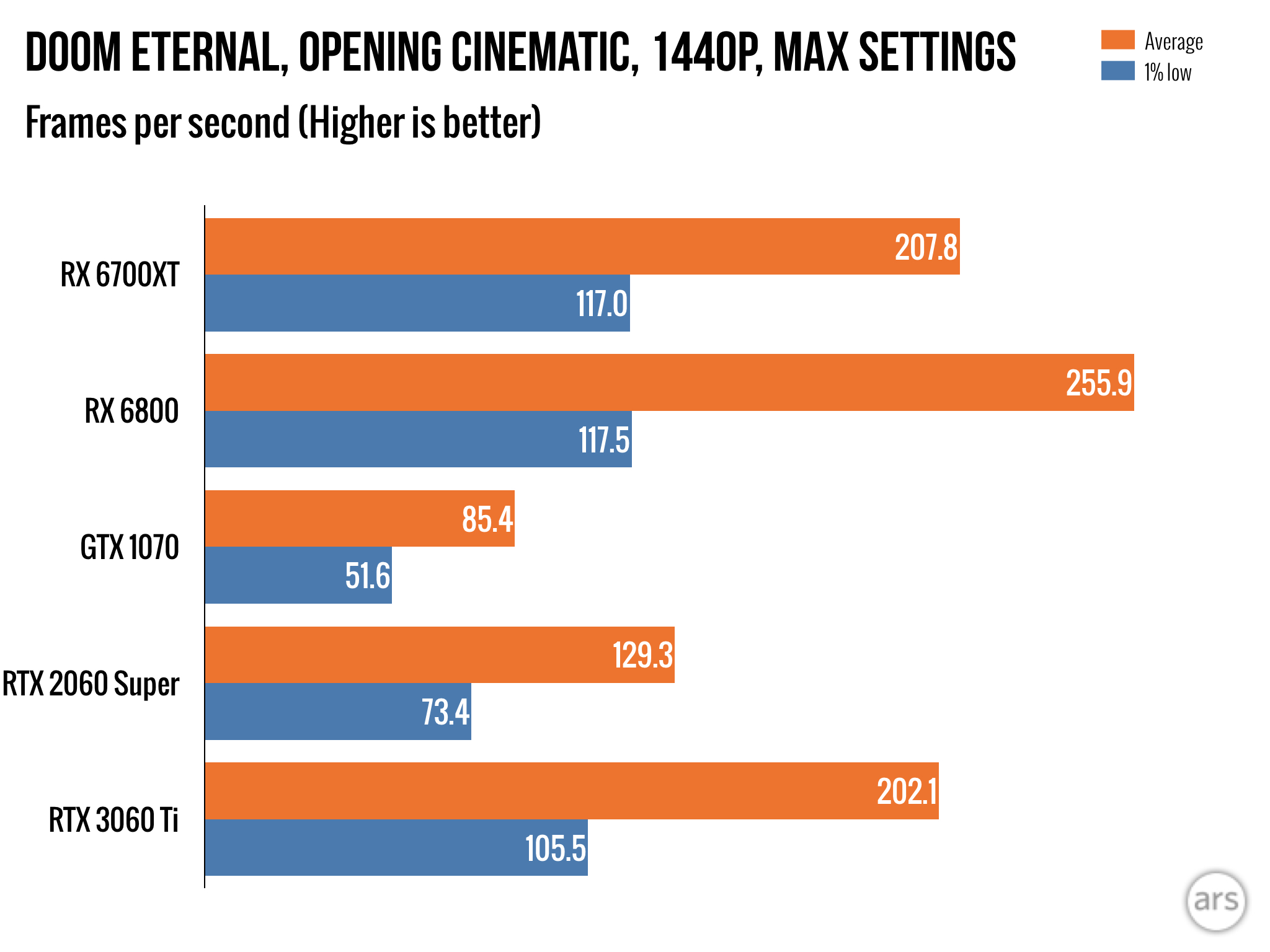

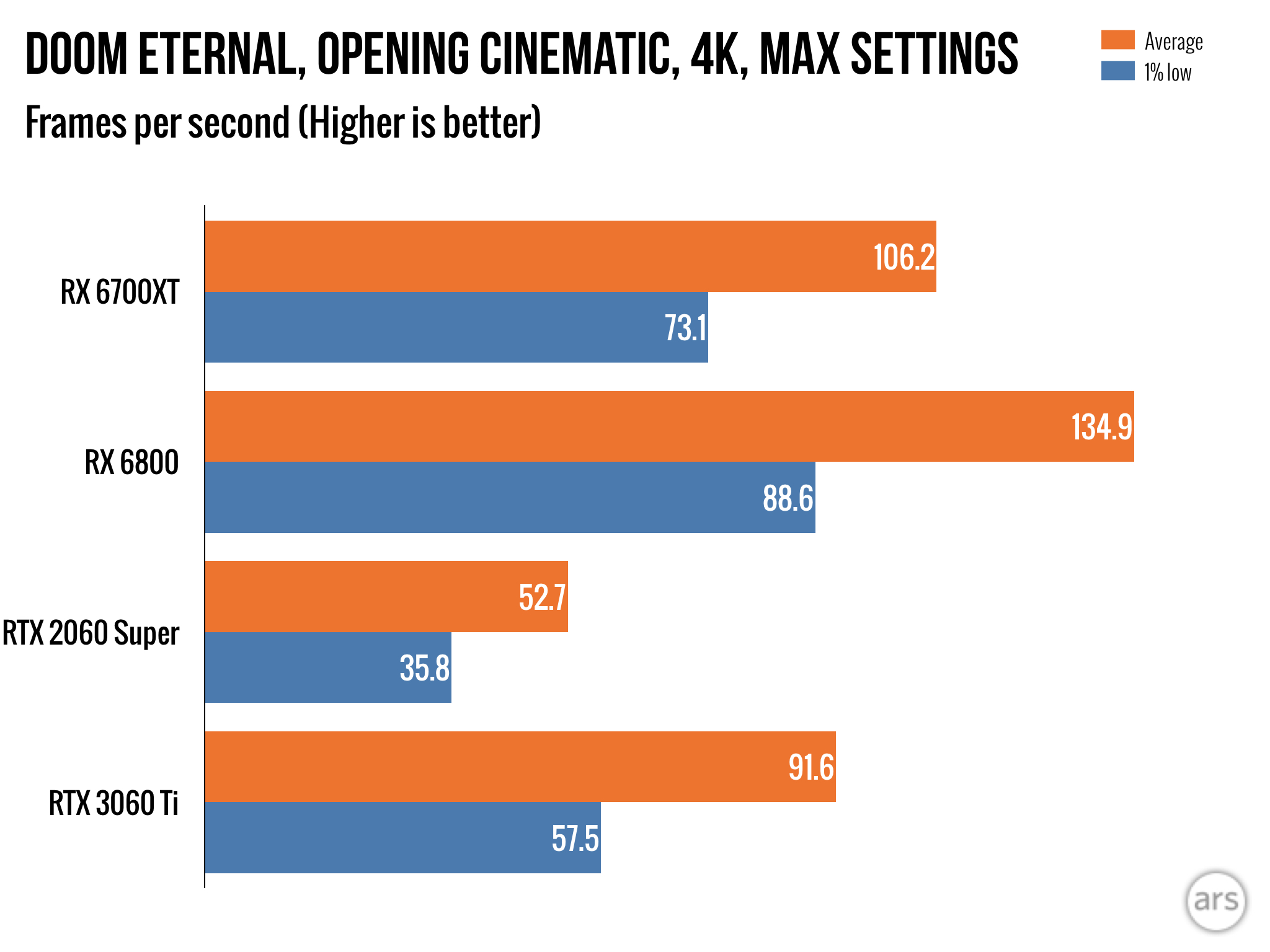

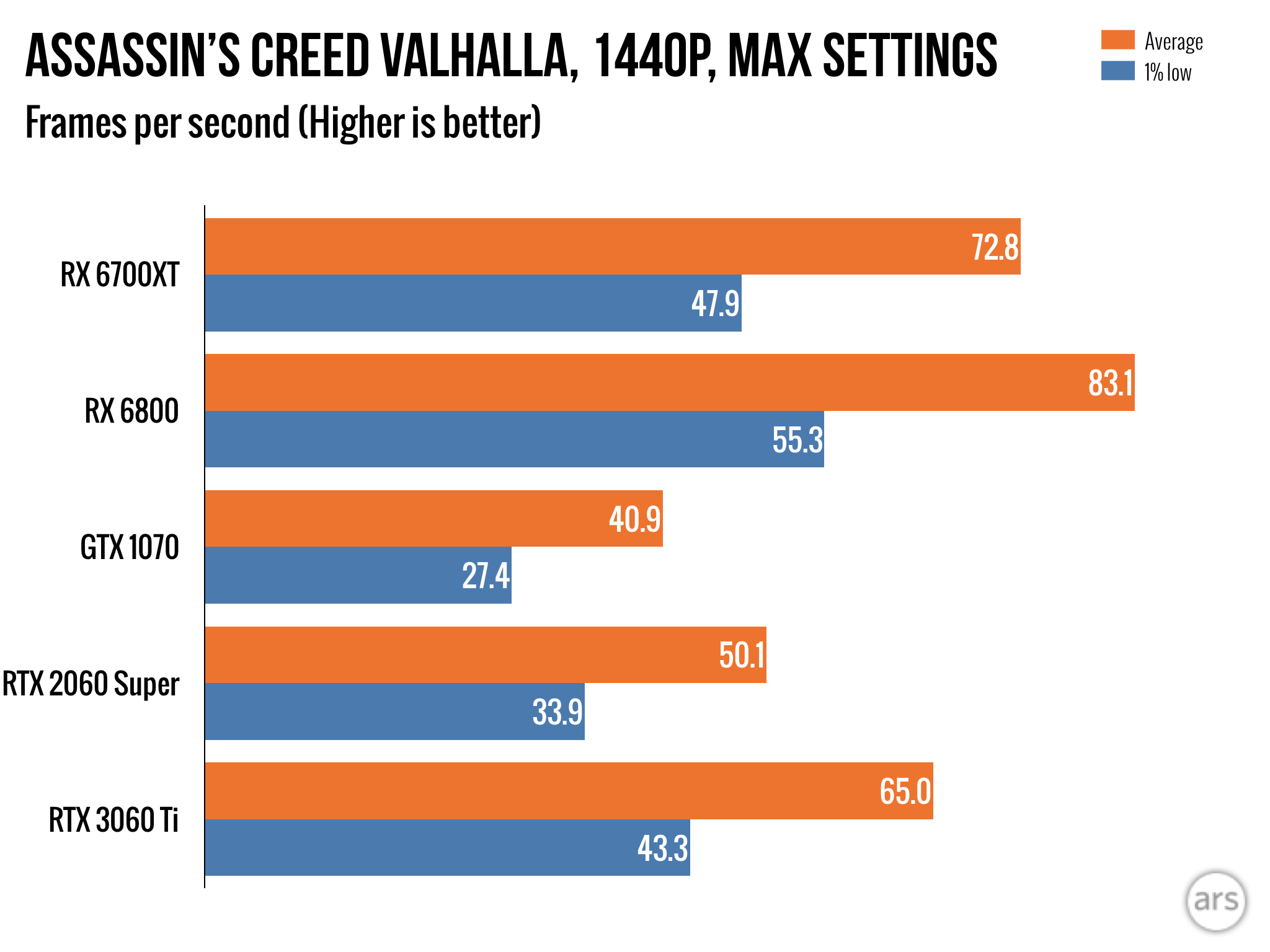

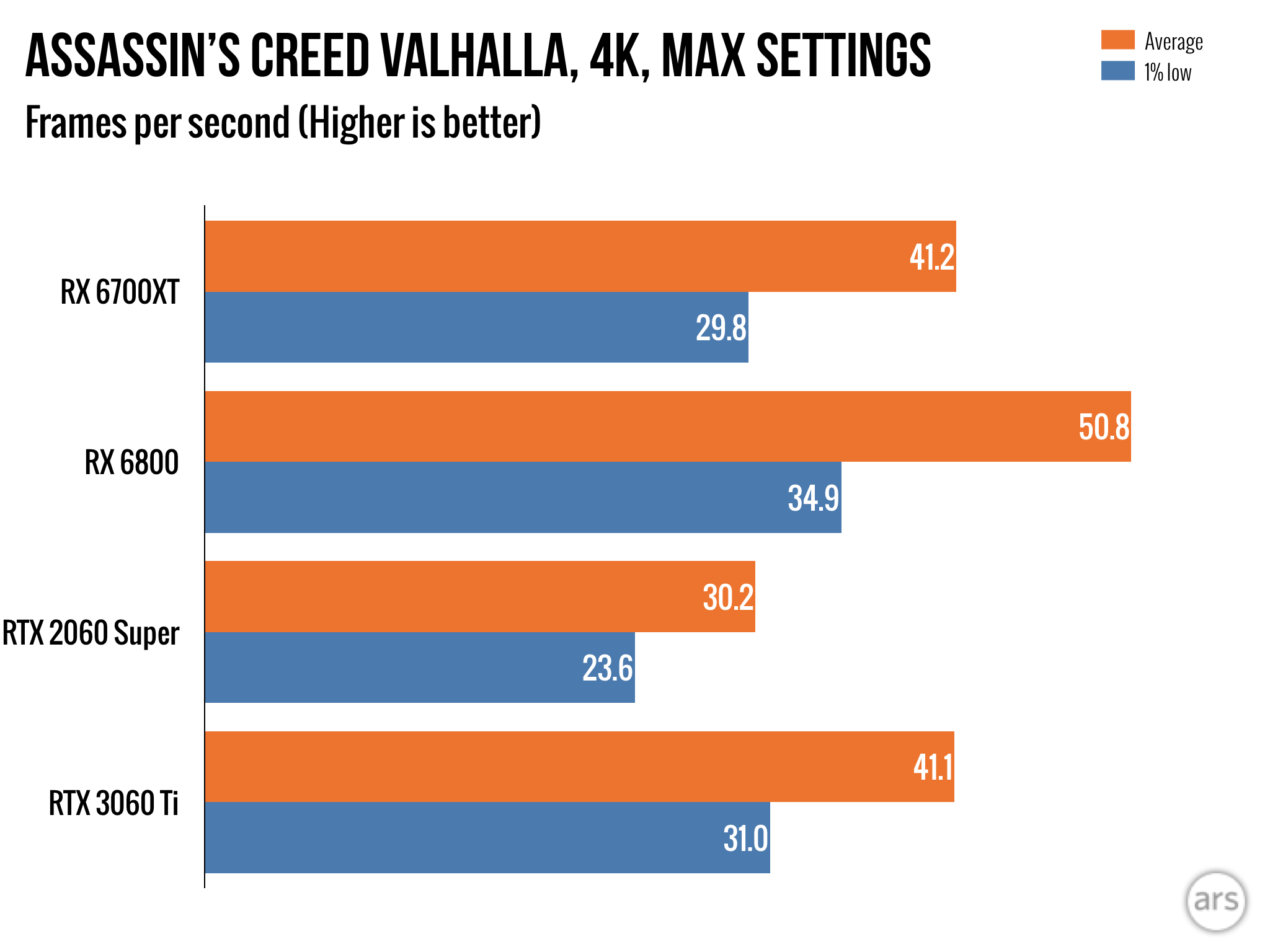

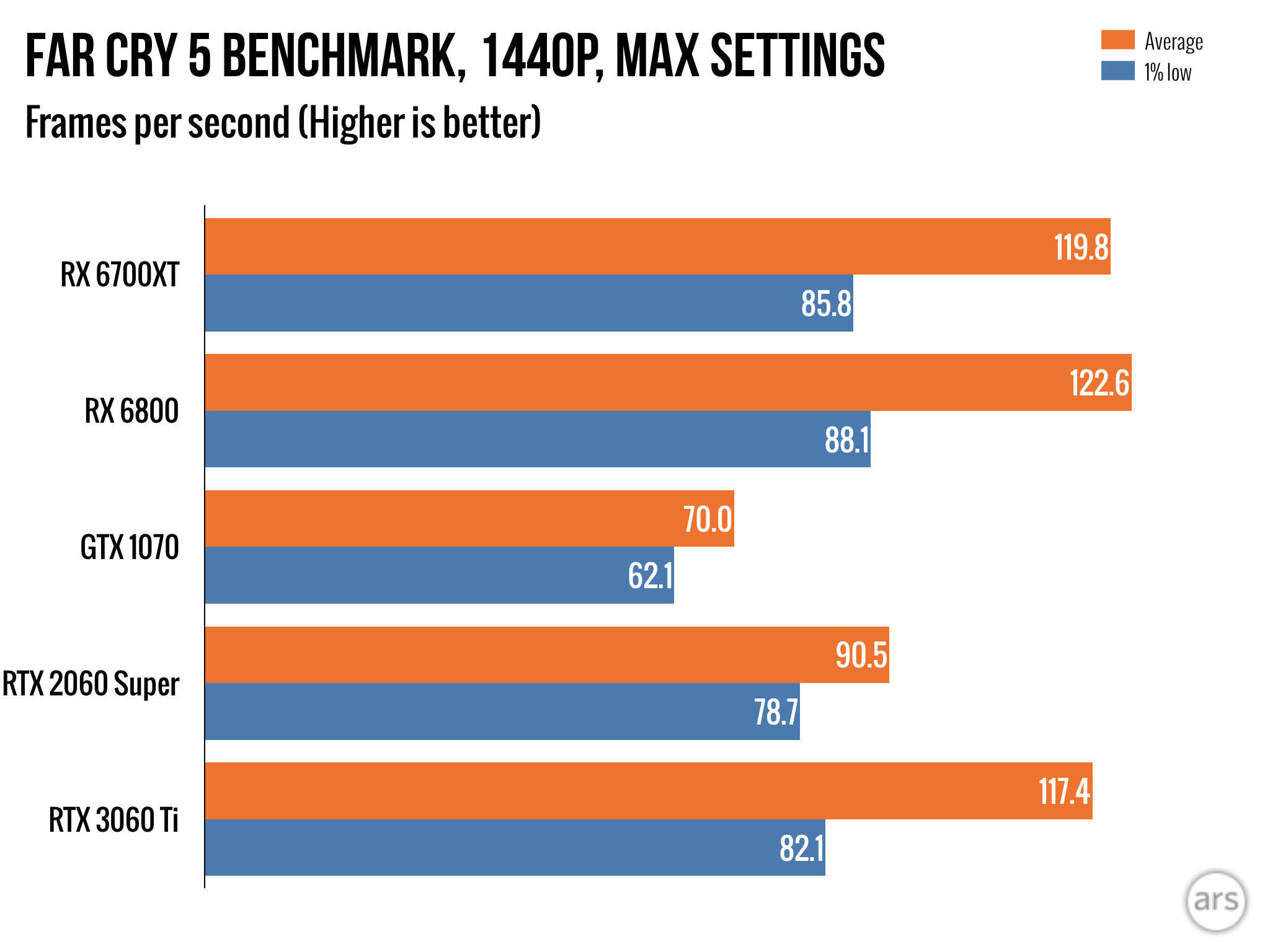

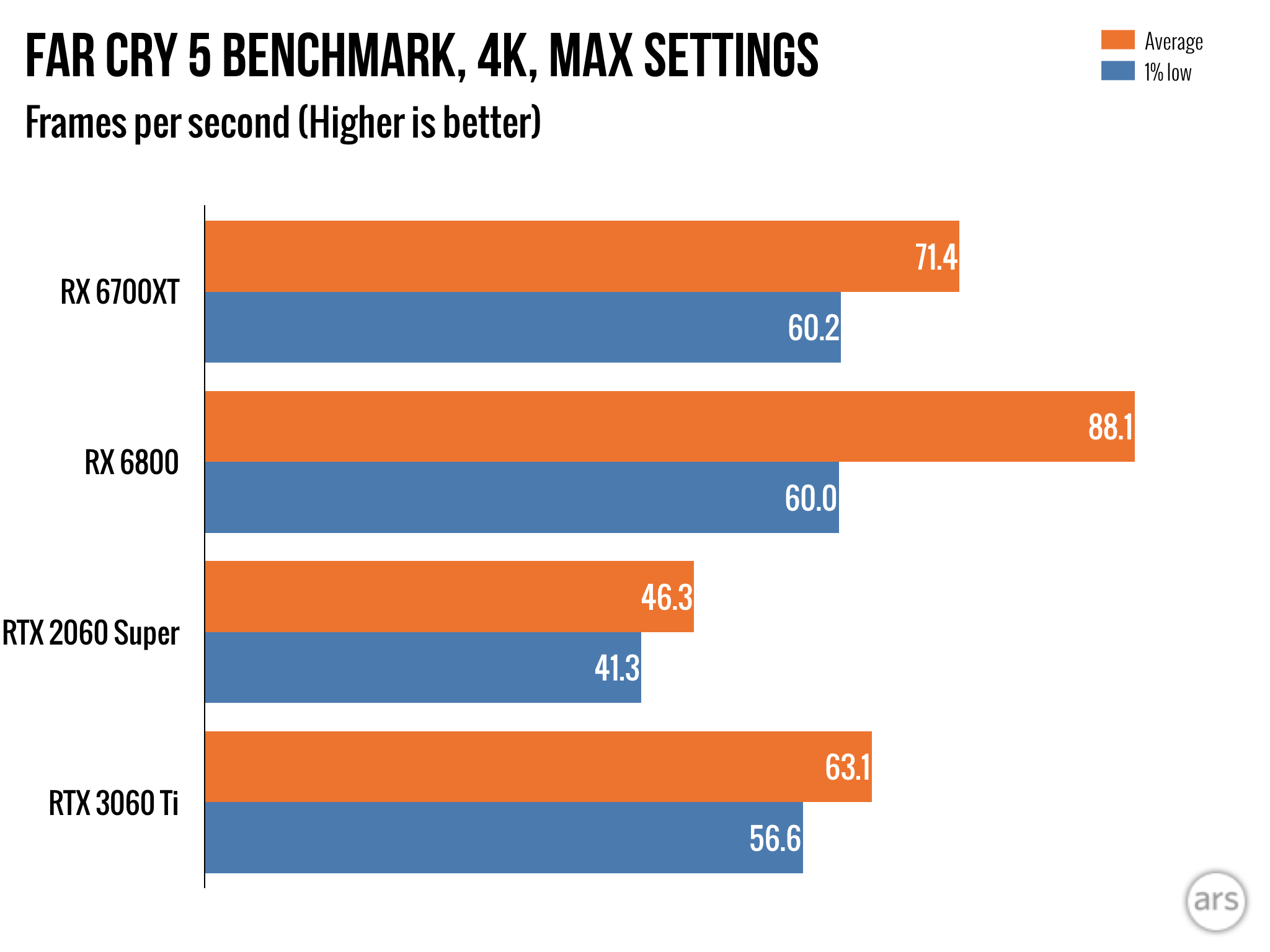

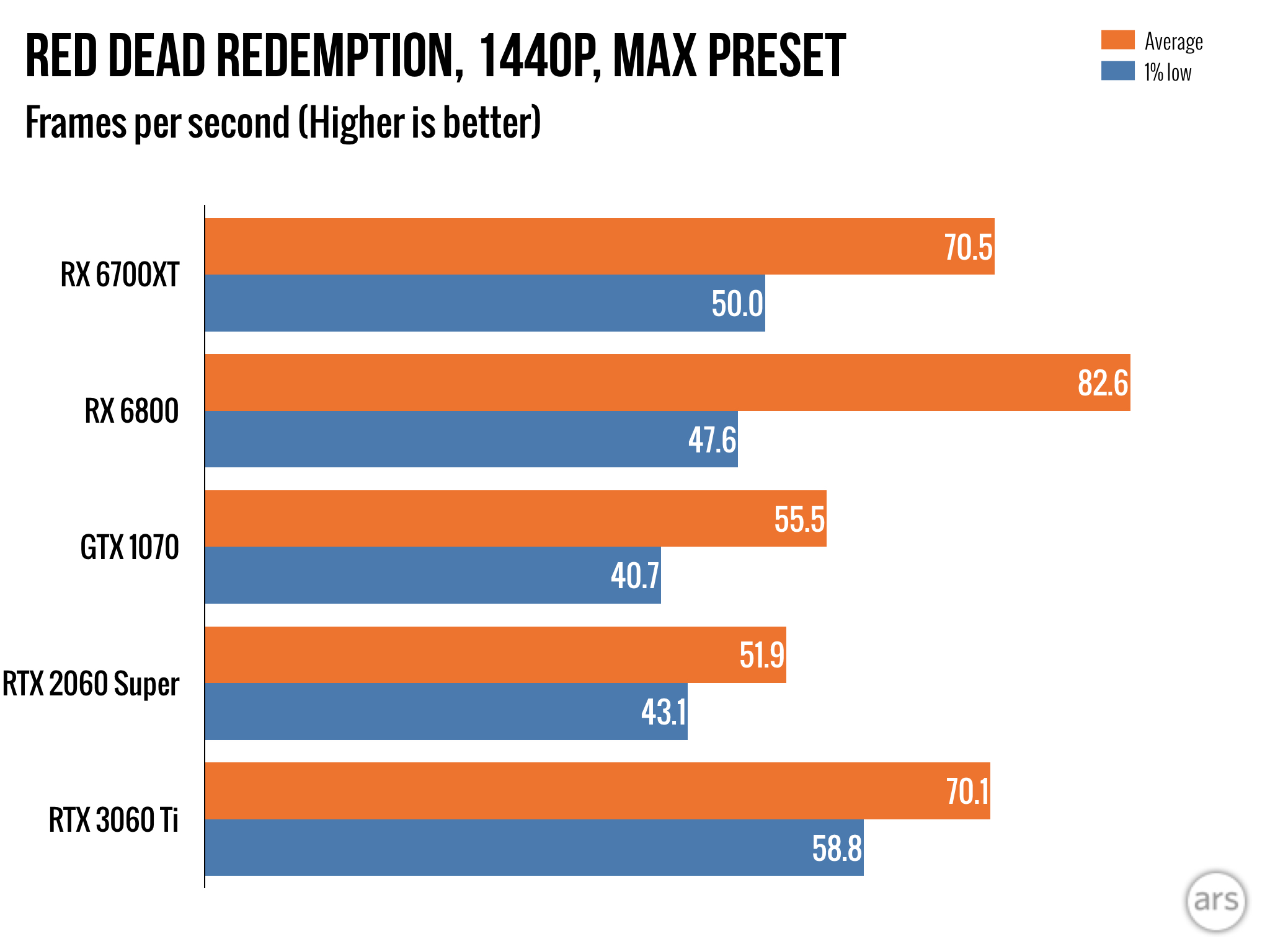

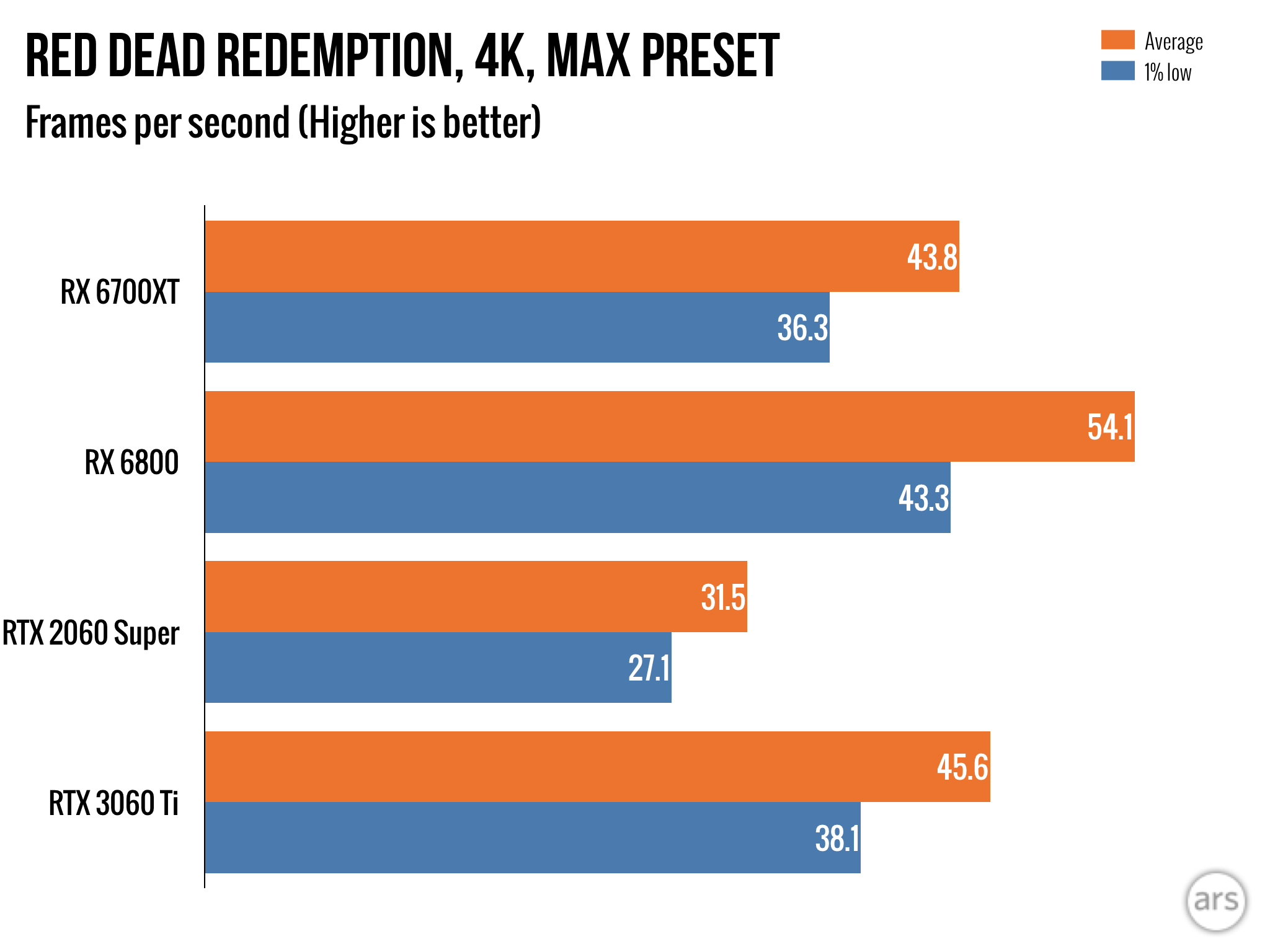

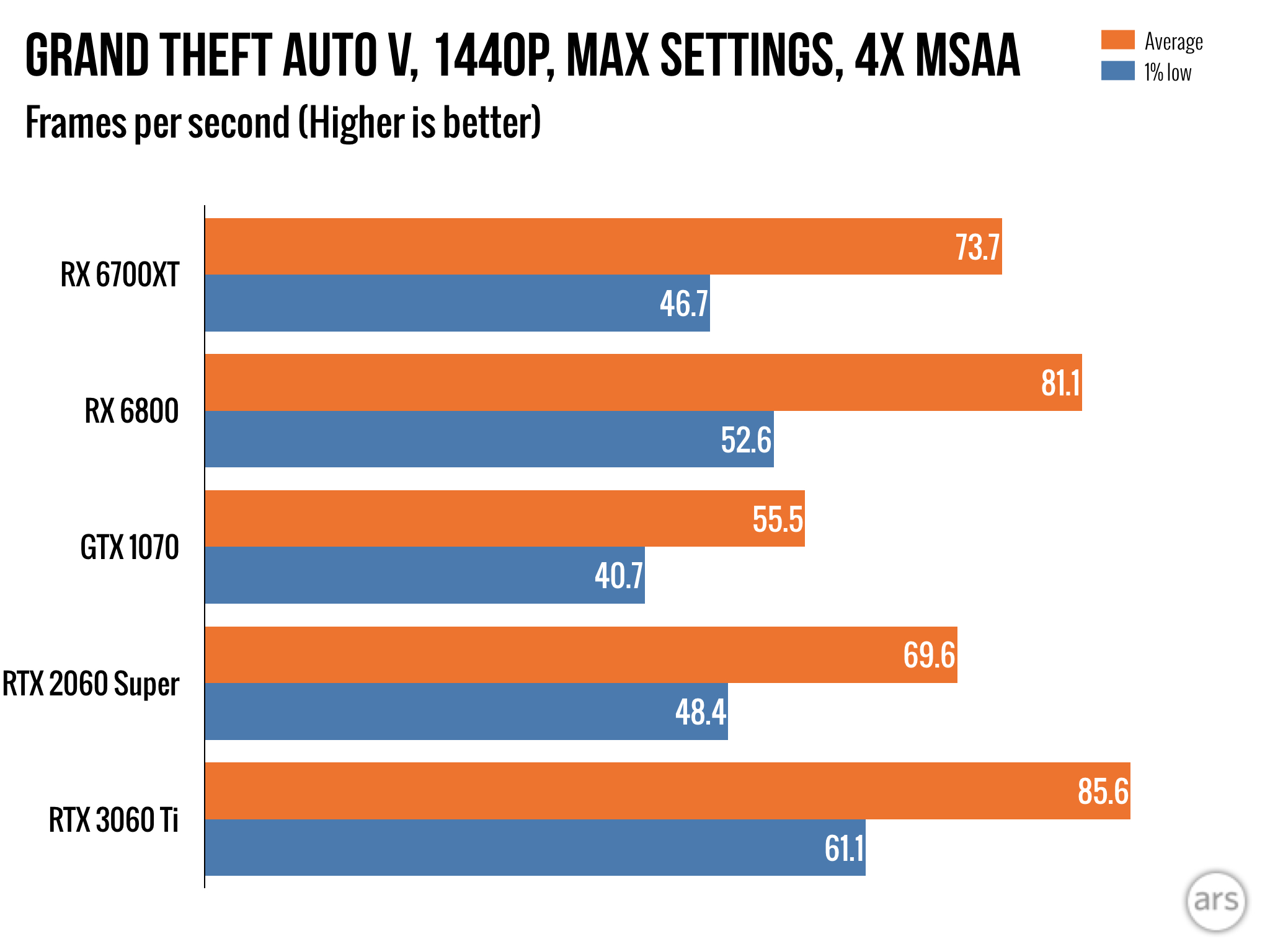

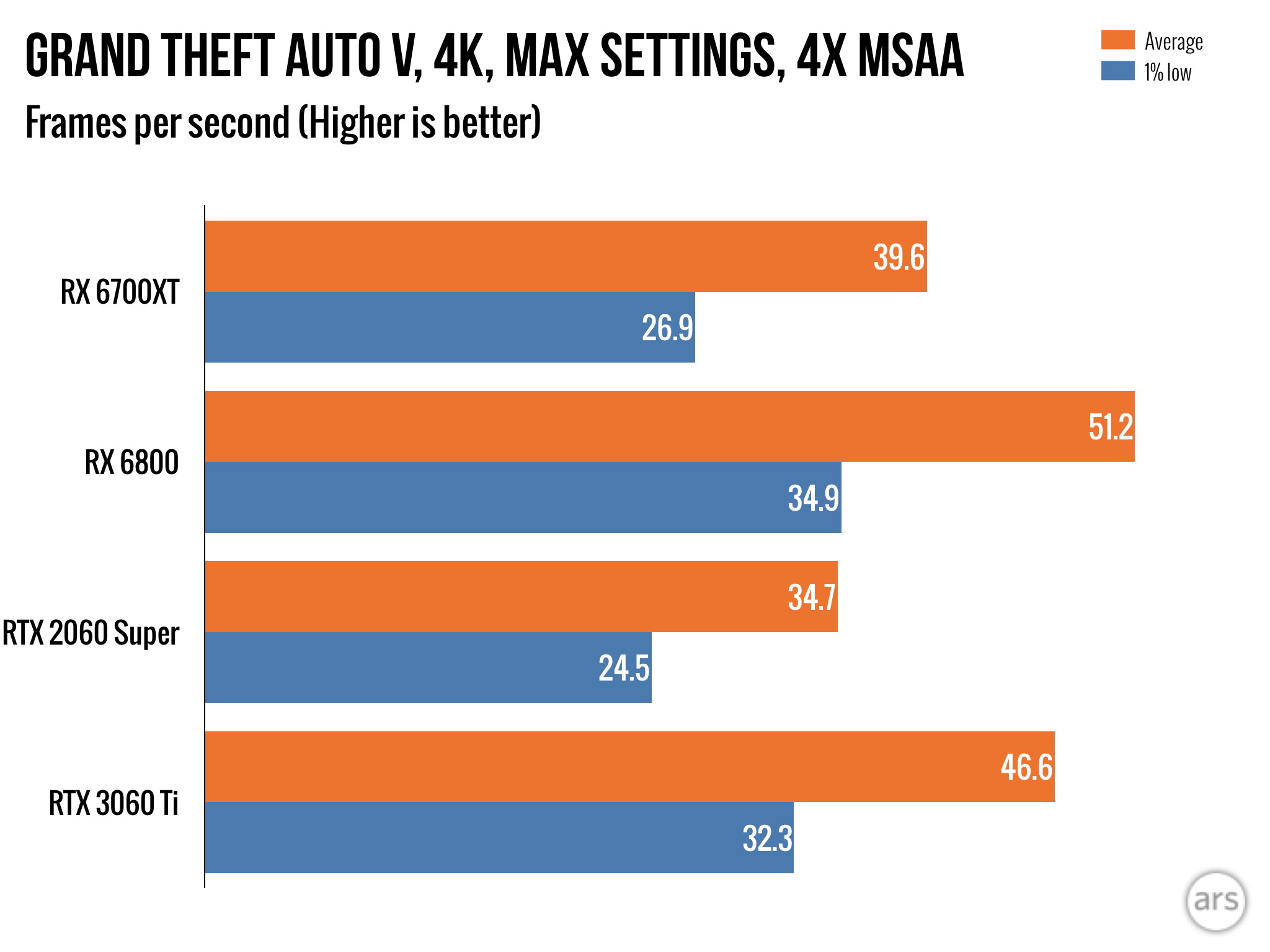

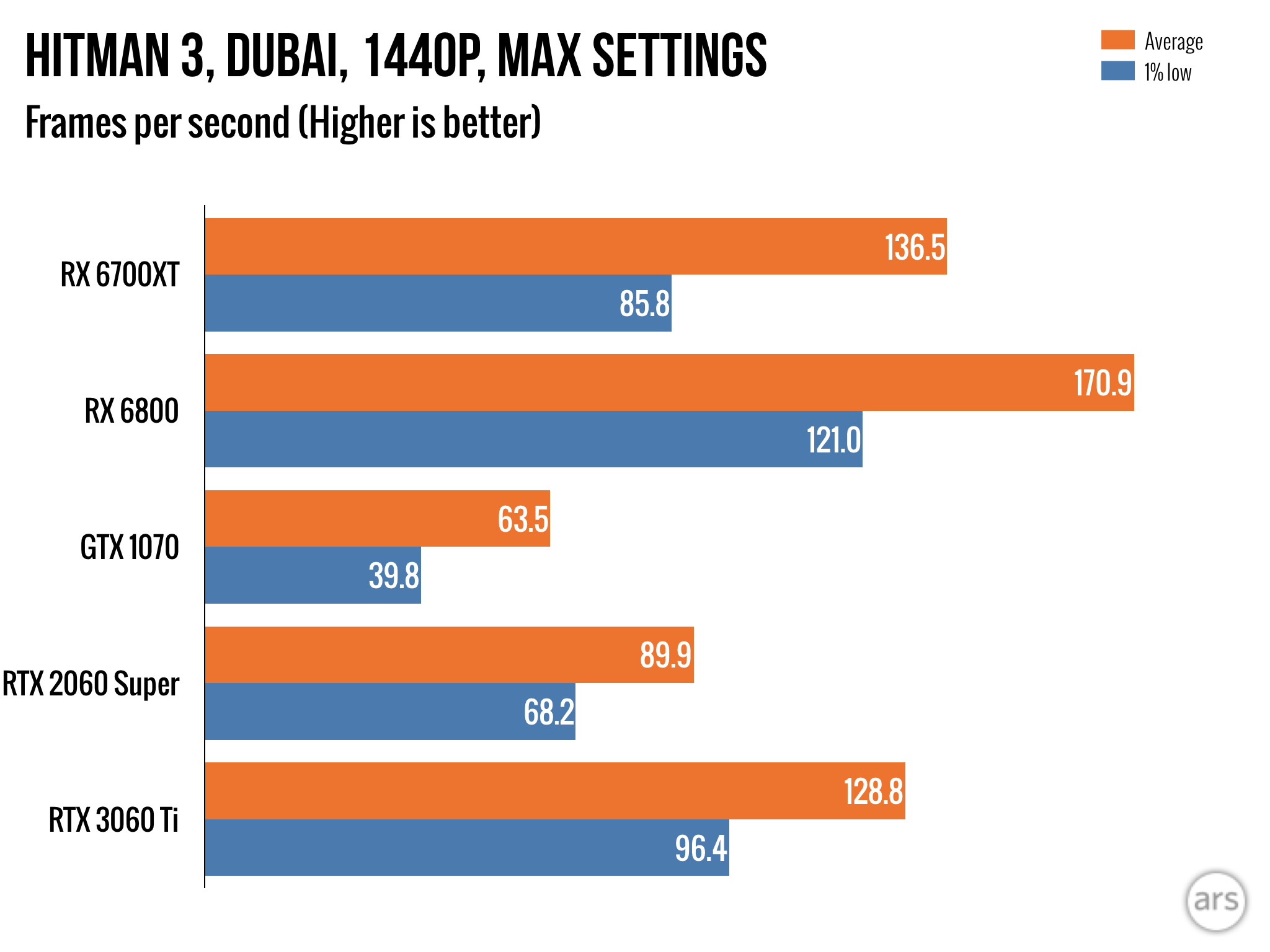

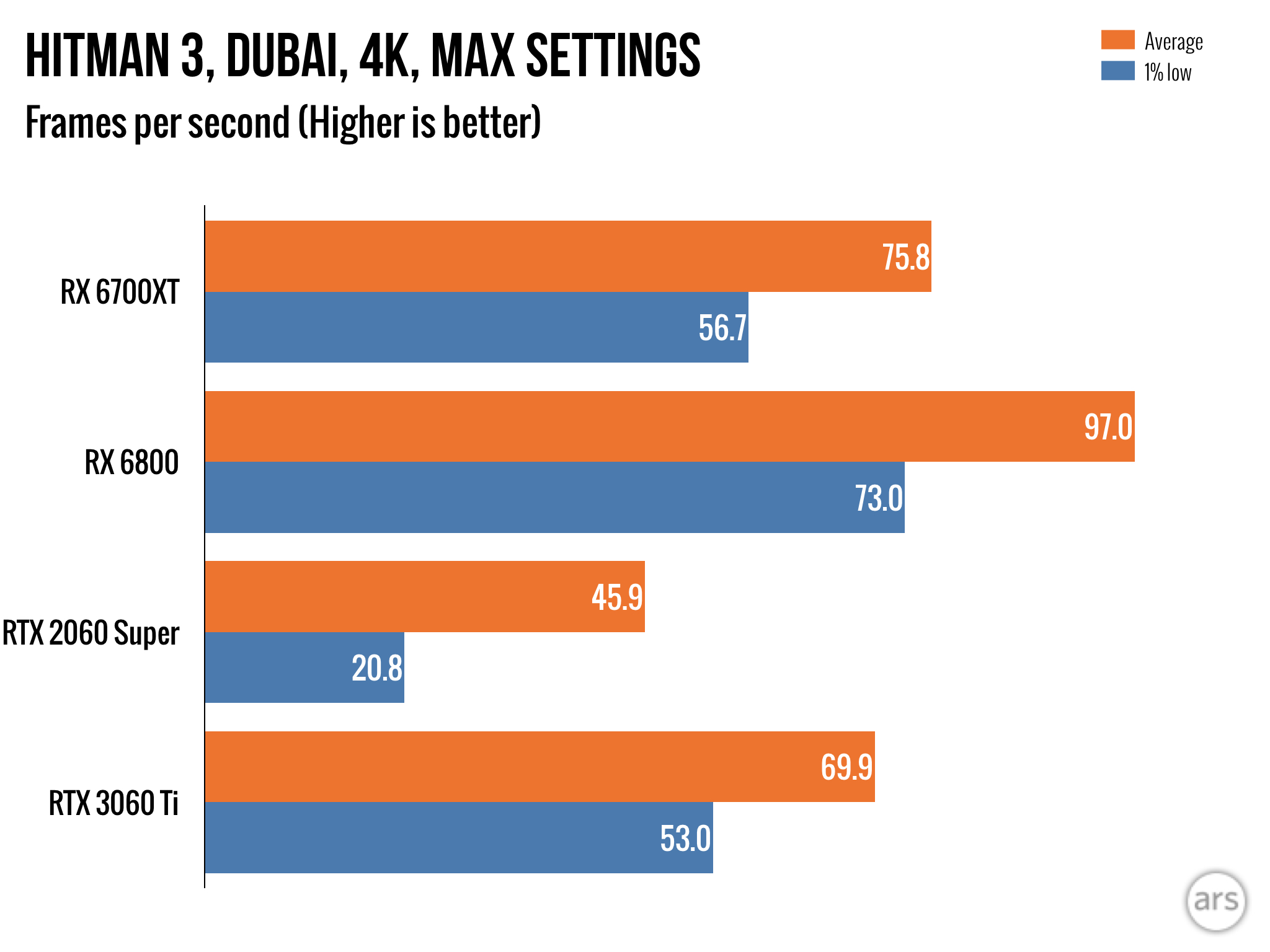

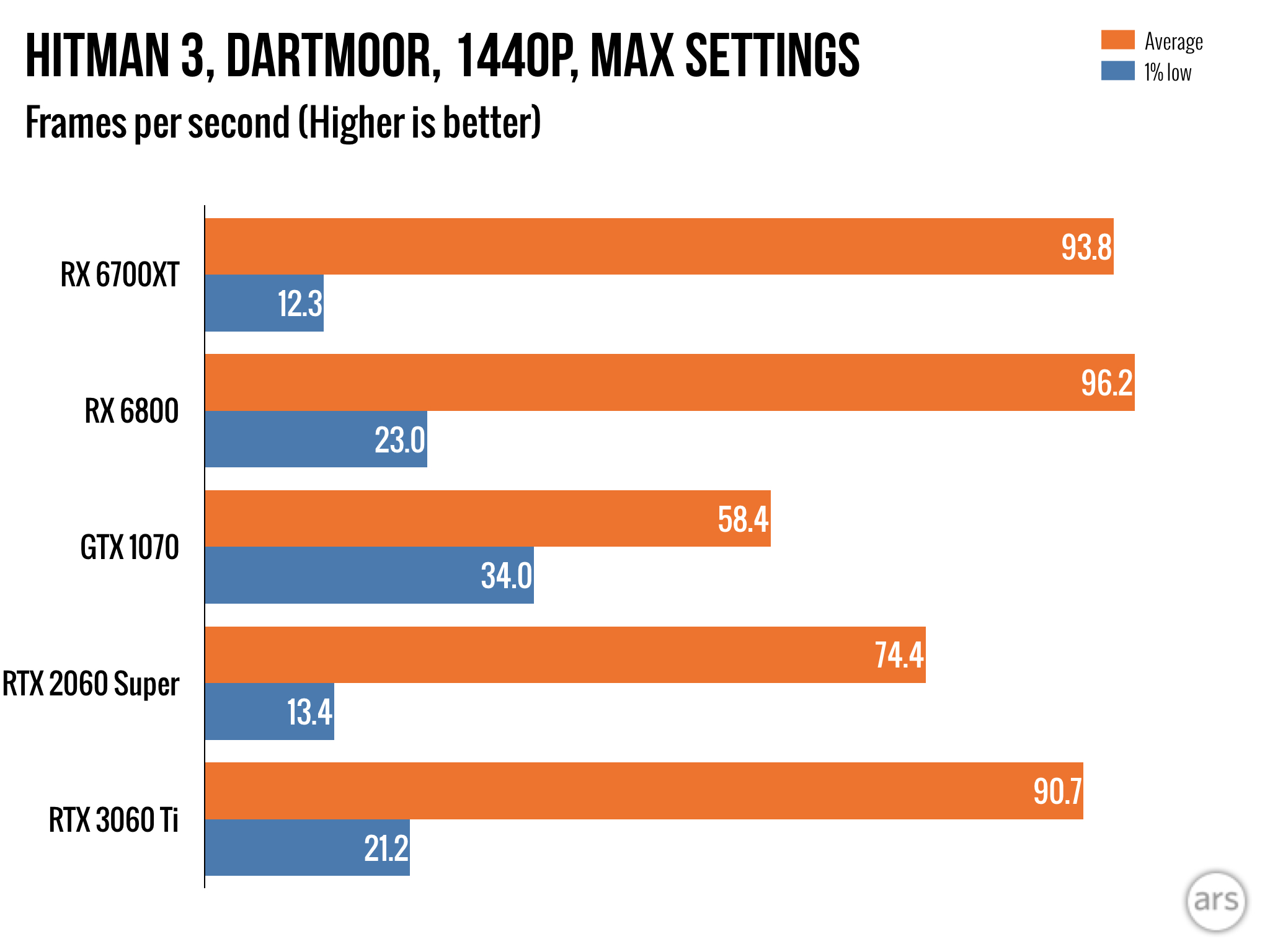

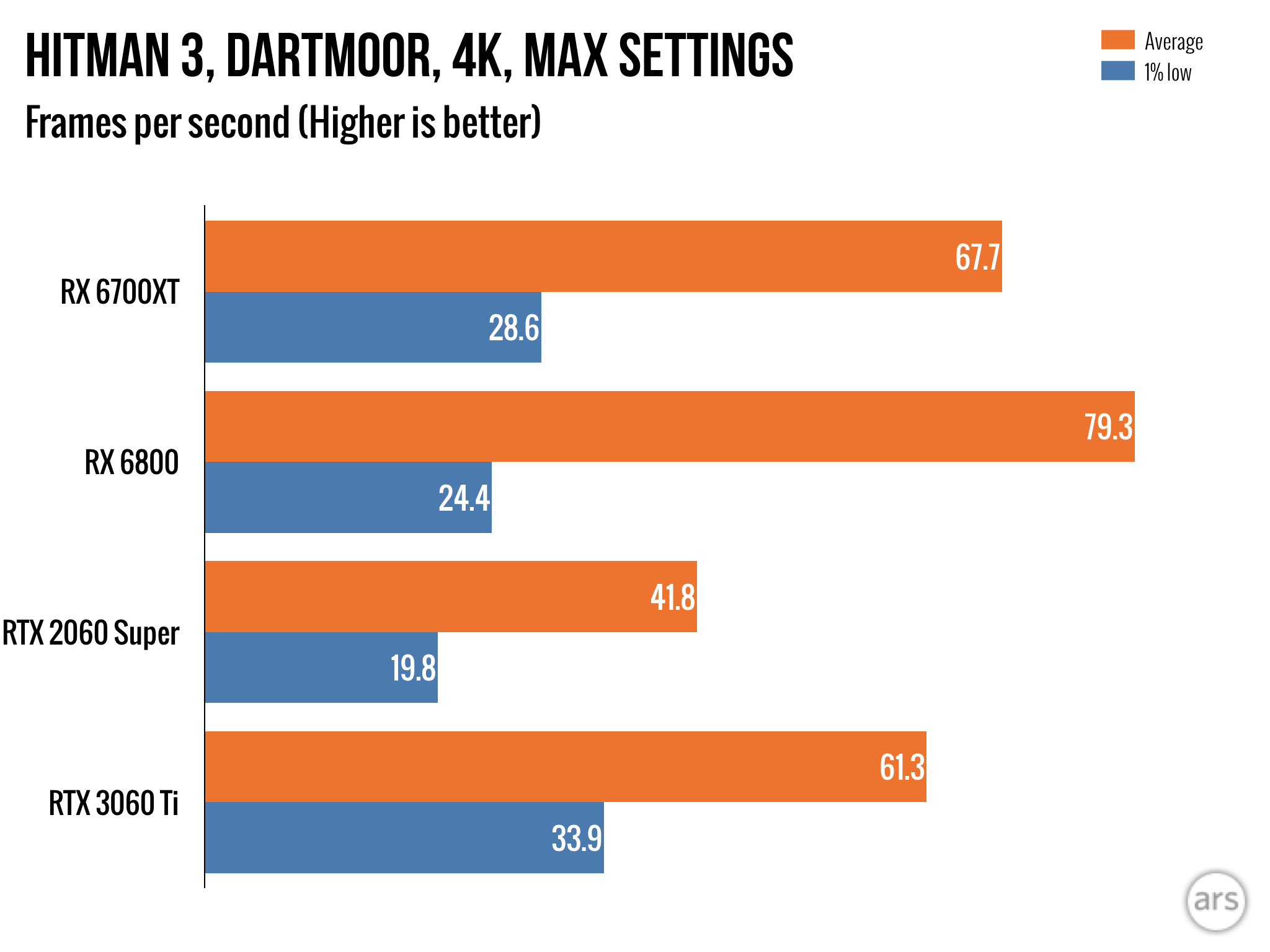

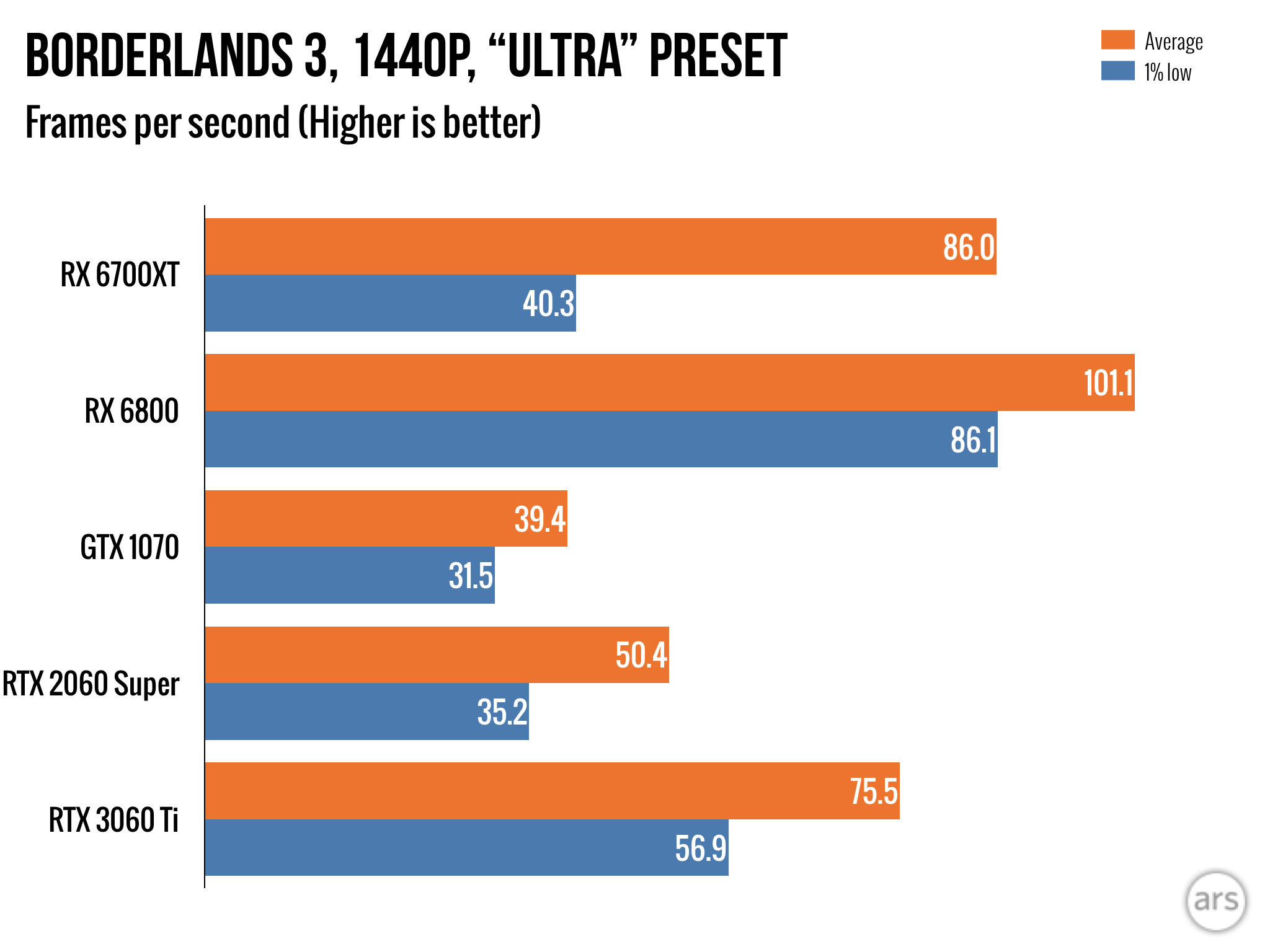

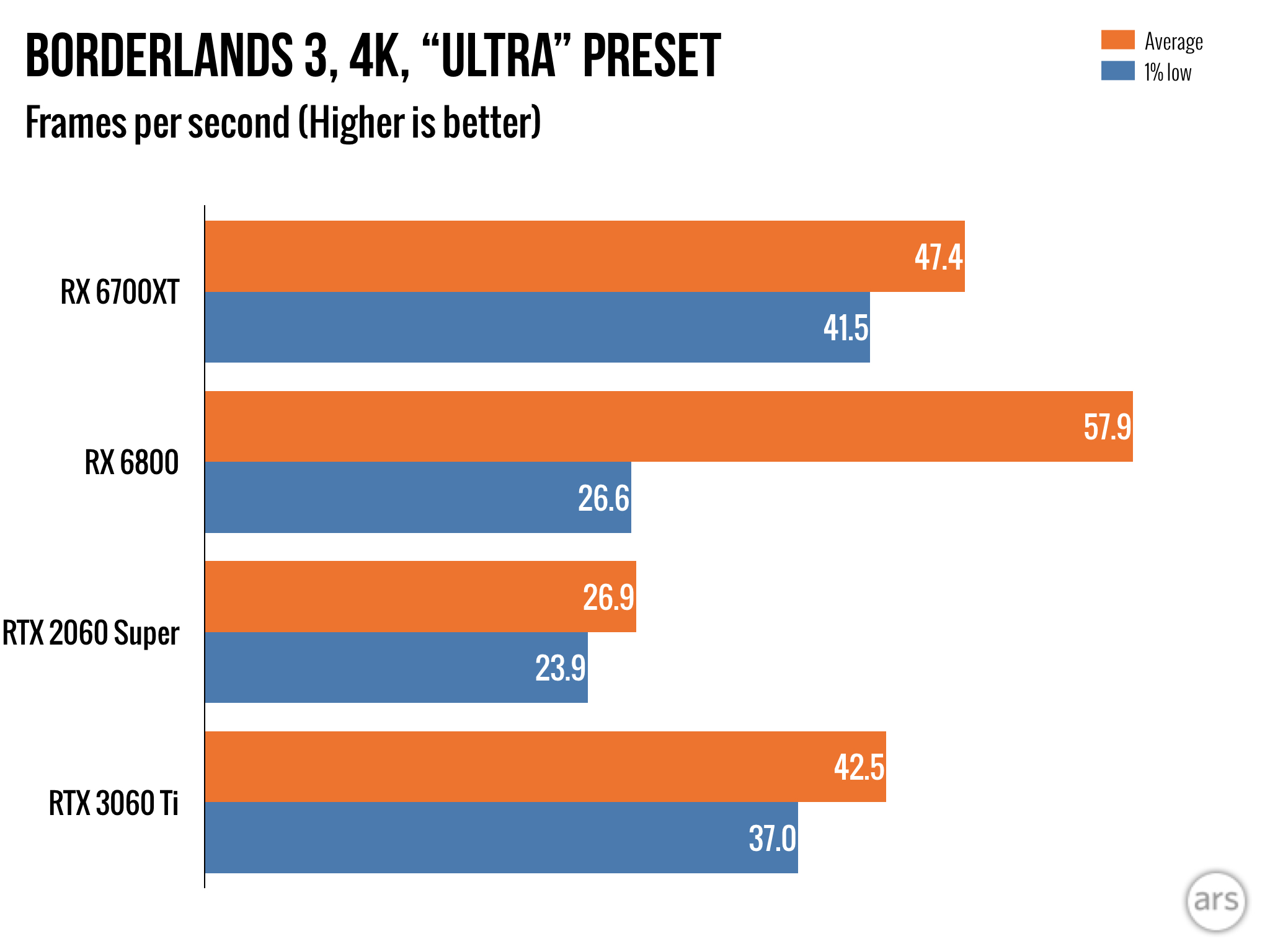

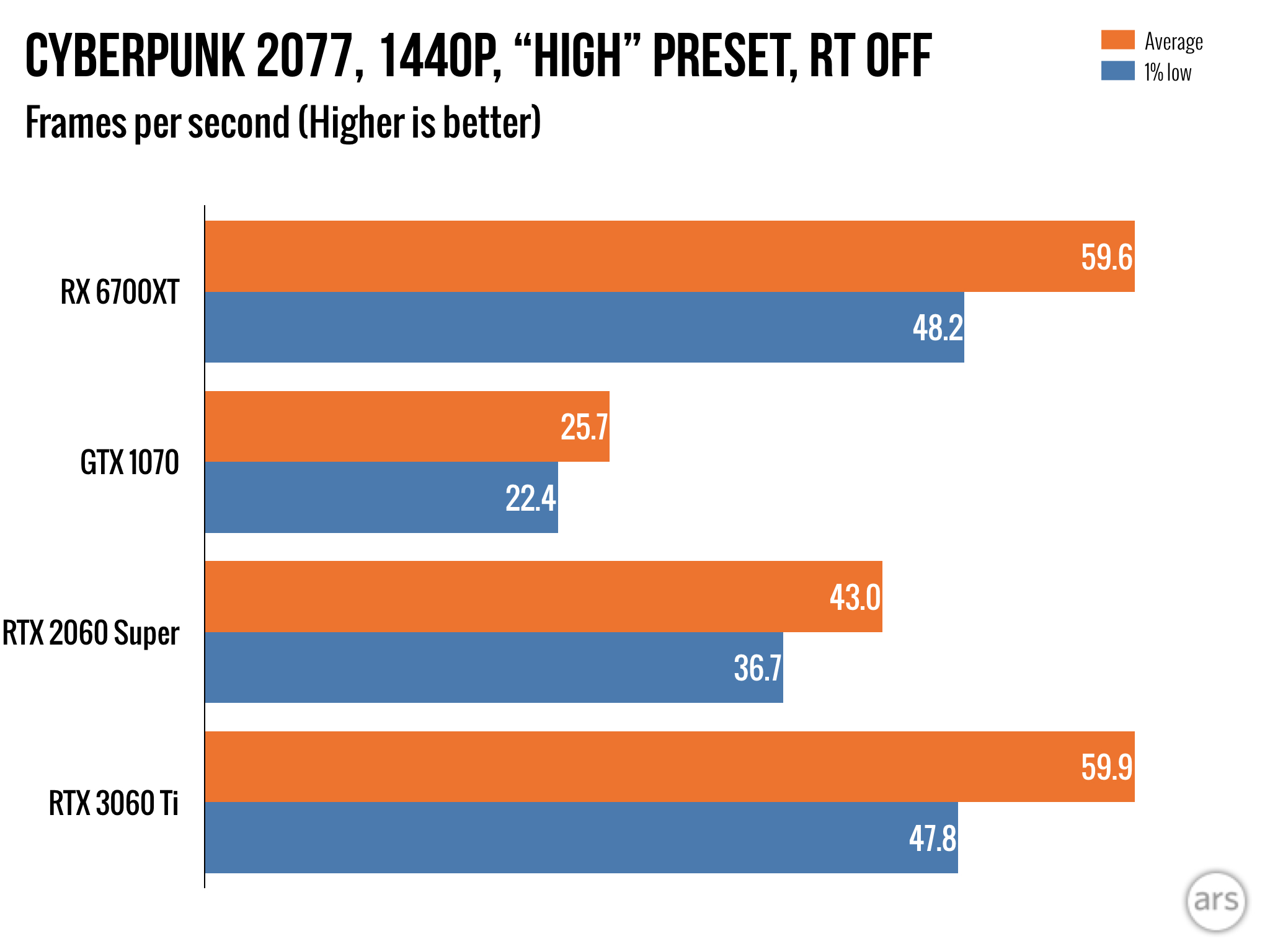

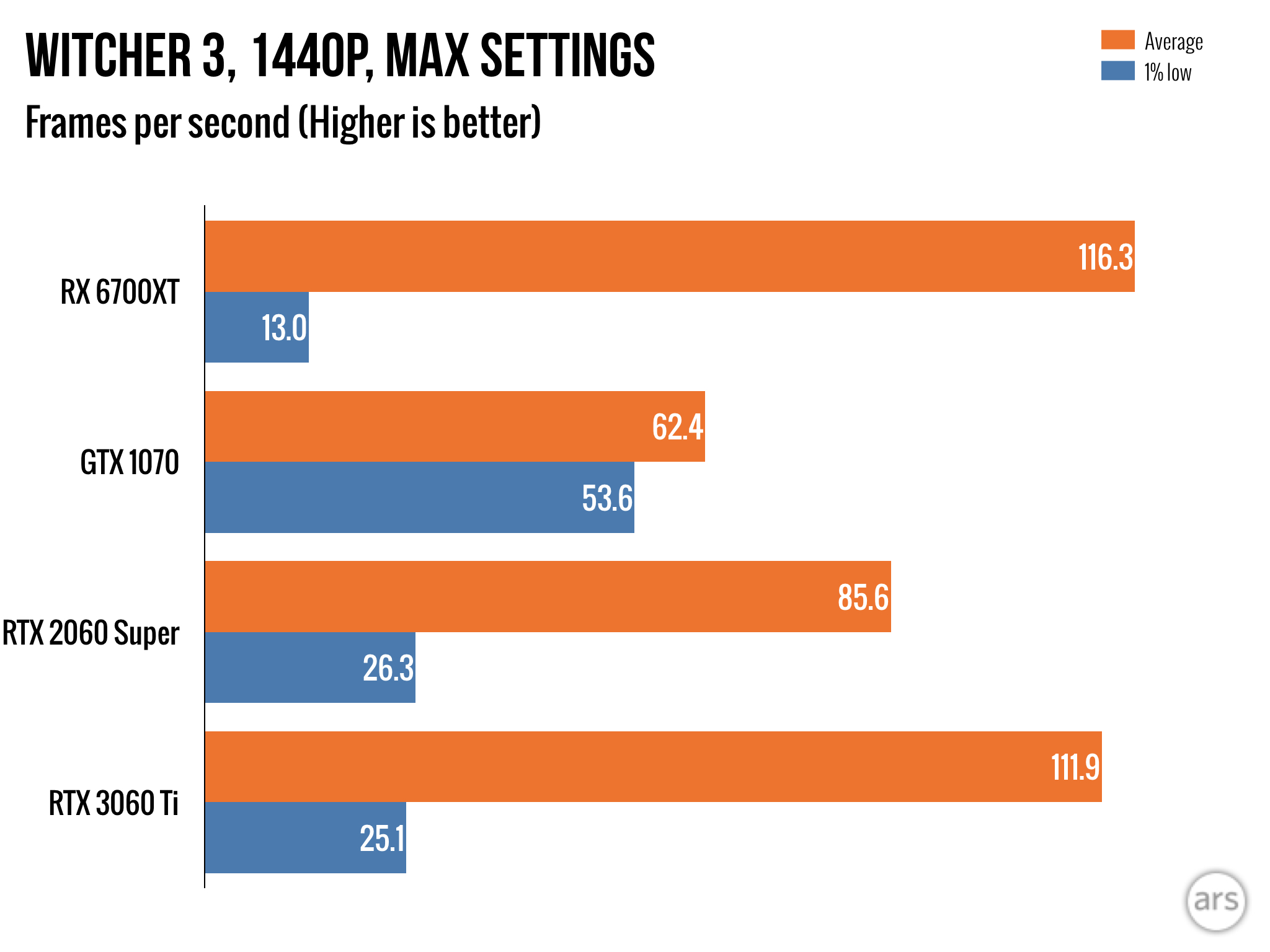

AMD would probably prefer that we compare the 6700XT to Nvidia's more recent $329 offering, the RTX 3060, and if those are the only two cards in stock, and we're not measuring store prices, the conversation tilts further in AMD's favor. As I reported last month, the RTX 3060 and 2019's RTX 2060 Super are neck and neck performance-wise, and you're more likely to have an RTX 2060 Super to compare this to, so I've put it in my benchmarks. My charts also include 2016's GTX 1070 as a "baseline" card and last year's AMD RX 6800. I'm using the latter to help you potentially measure the curve of what a hypothetical RX 6700 (non "XT") could look like in terms of stepping down from one model to the next.

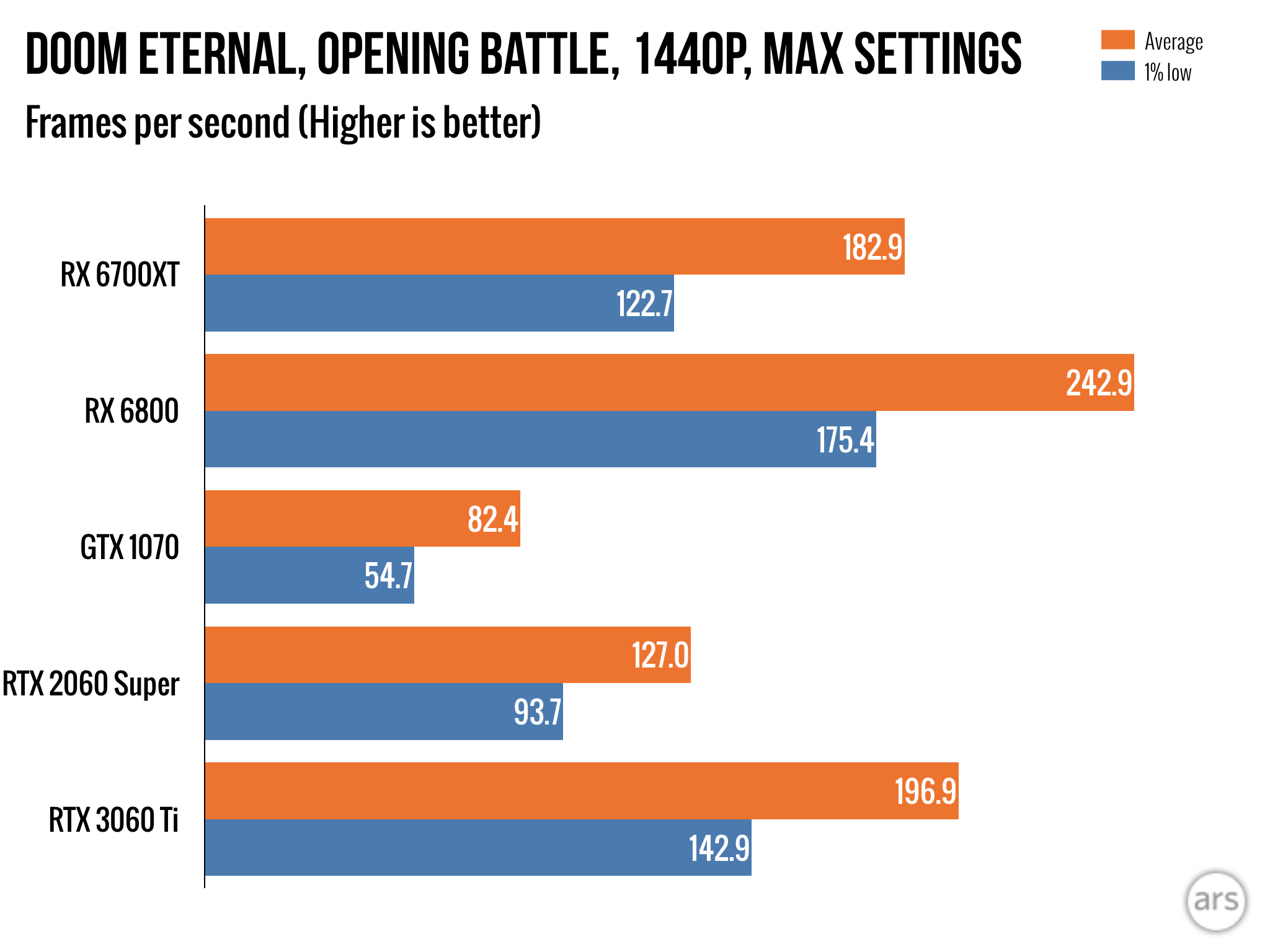

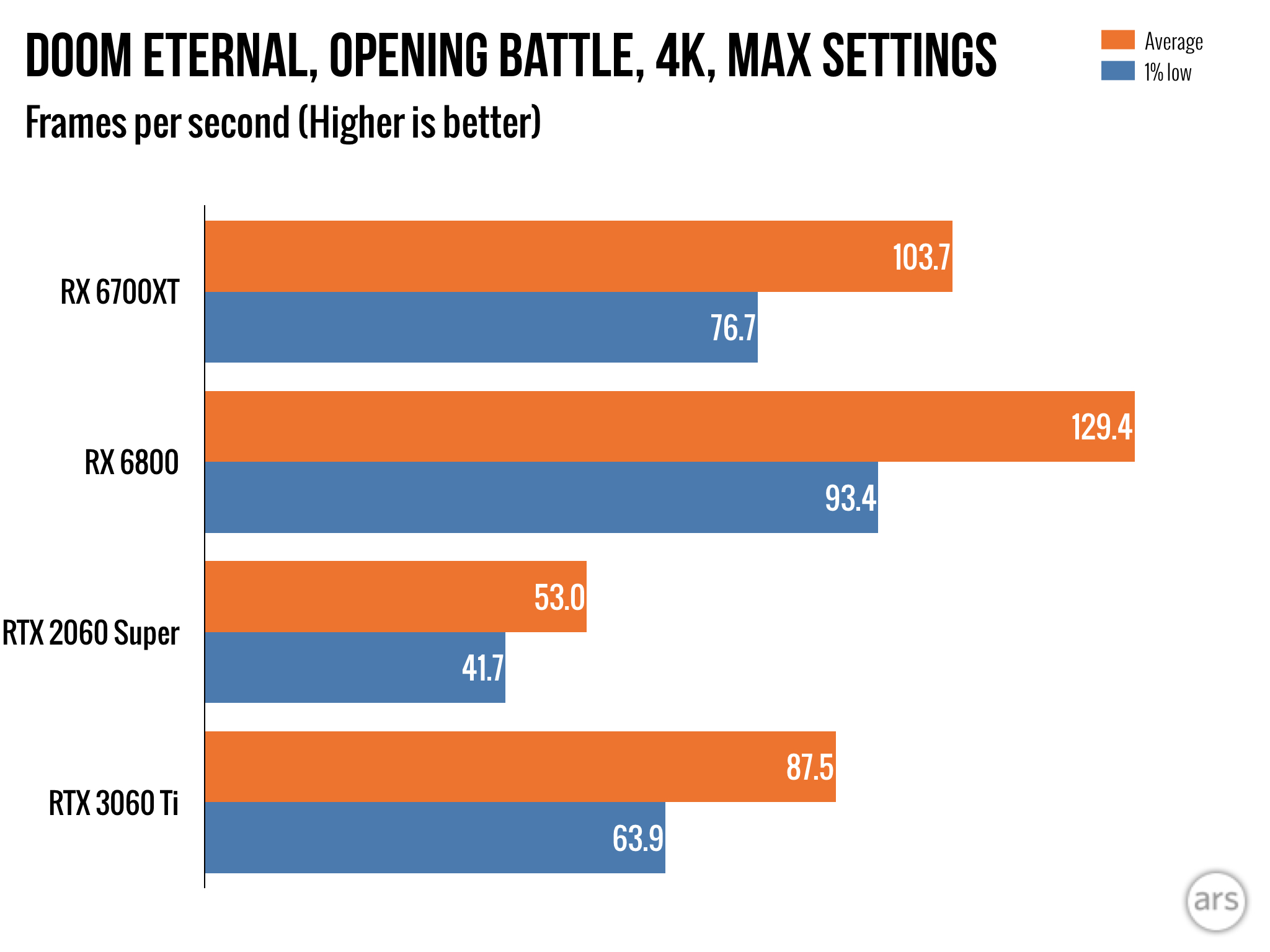

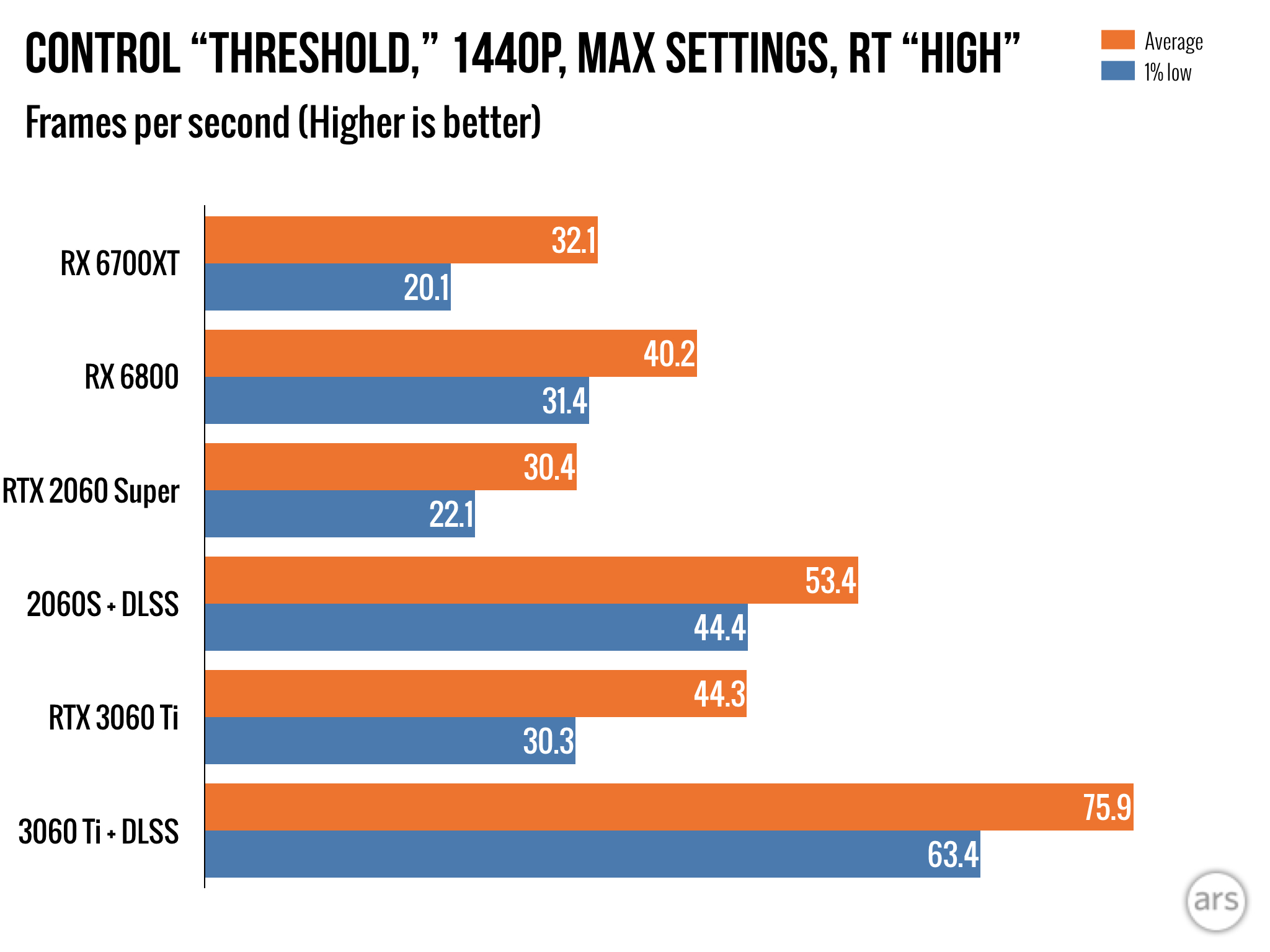

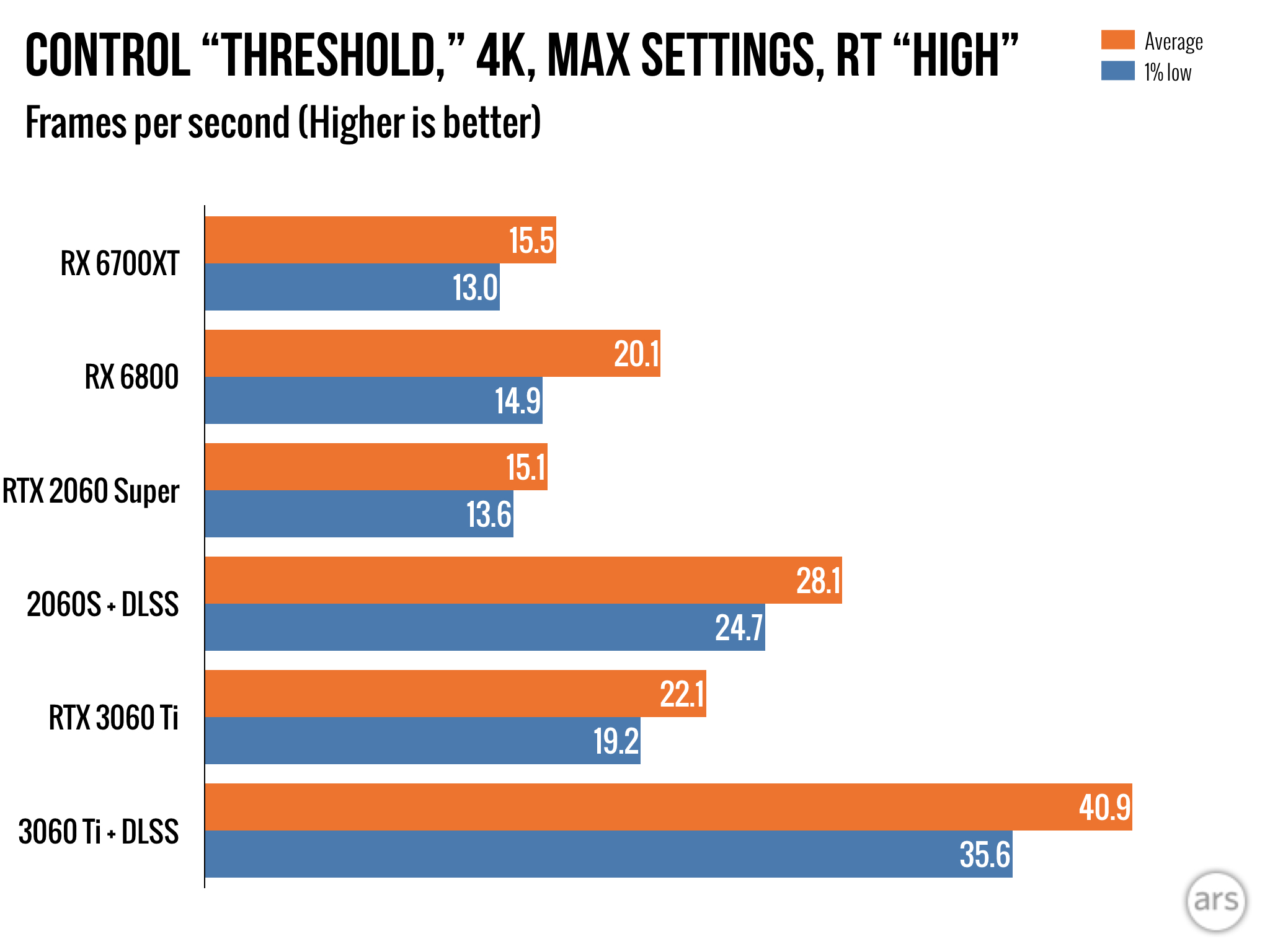

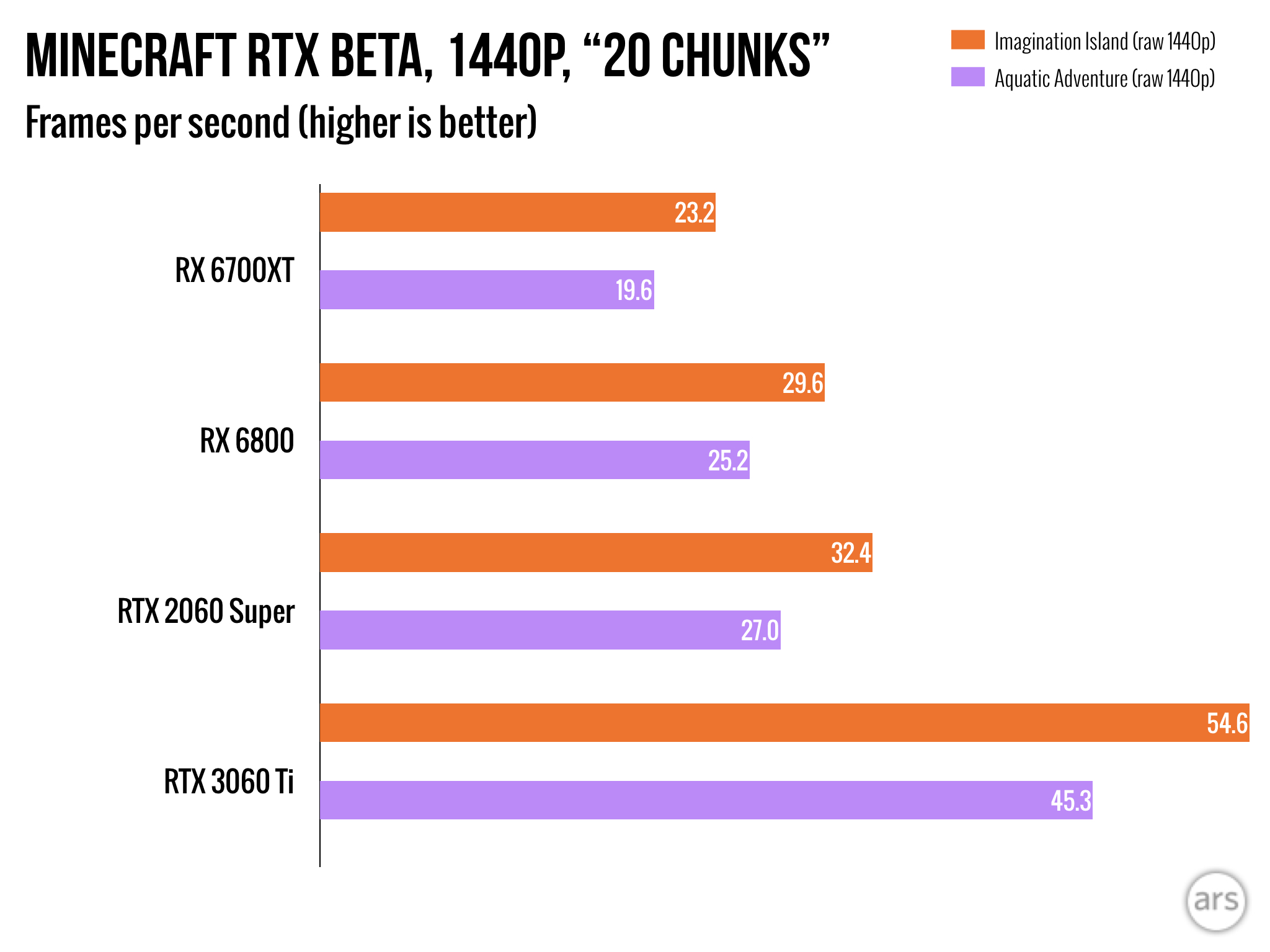

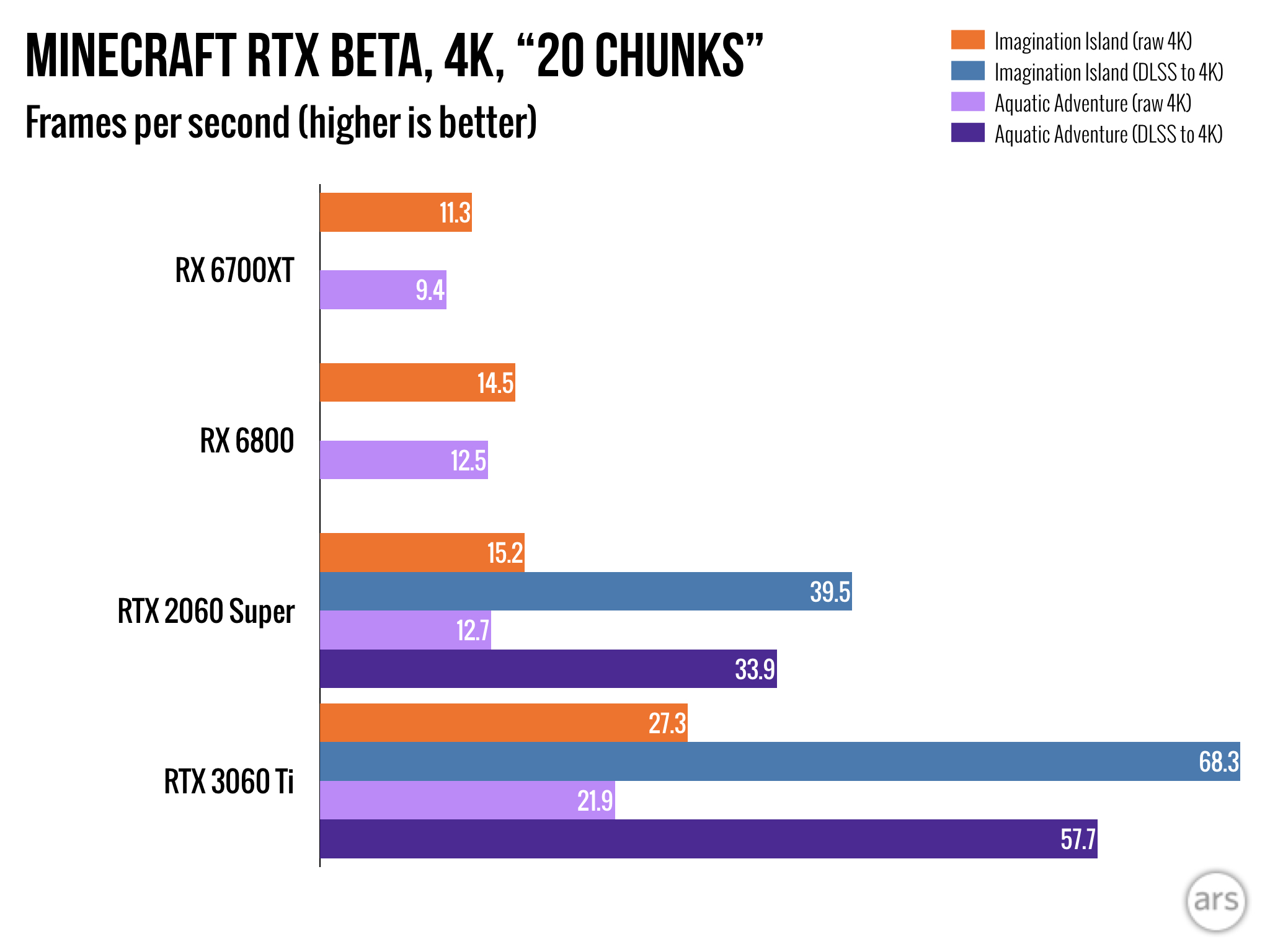

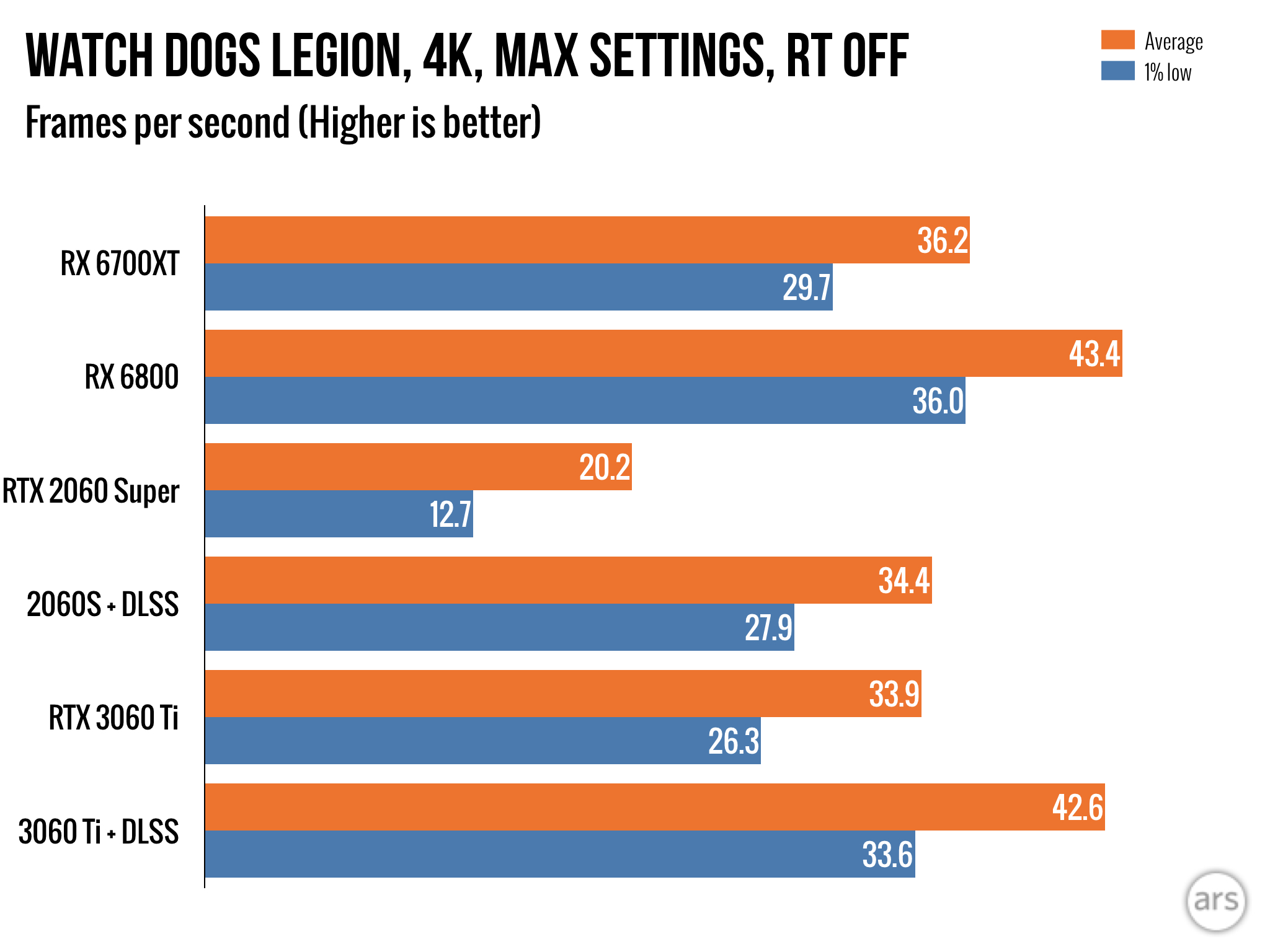

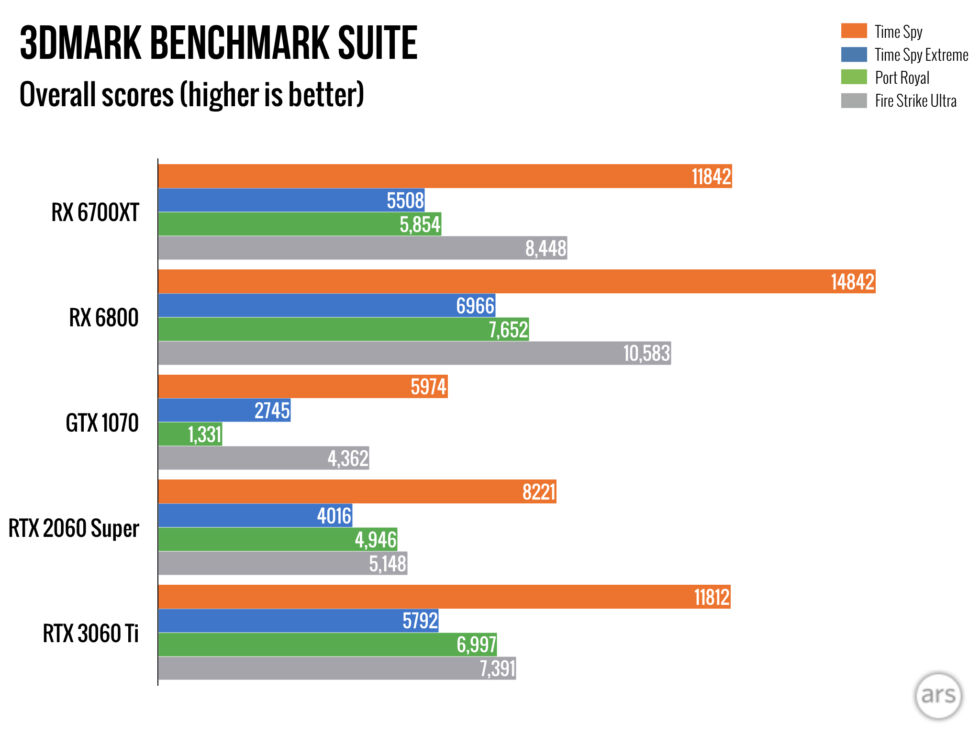

The "1% low" bar in most of these charts represents the worst FPS you can expect 1% of the time. It's arguably the quickest way I can clarify whether a particular test or GPU/game combo comes with frame time spikes. The closer that 1% value comes to the average, the more stable the refresh rate.

This gallery is mostly captionless to make it easier to read the charts; if a caption gets in the way, click the gallery to make it full-screen.

This "opening battle" is a highly variable test of me playing through an opening sequence. It's less reliable than a firmly repeatable benchmark, but it's here for your consideration about how the GPUs handle real-time gameplay.

New to our benchmark suite: Hitman 3, which is a mildly updated version of IO Interactive's long-running Hitman engine. The new "Dubai" test primarily pushes GPUs with reflection effects.

Hitman 3's Dartmoor test, meanwhile, is a beast of particle and fabric effects, and every GPU struggled with the workload in terms of holding a firm frame rate.

As a very, very unoptimized game, Cyberpunk 2077 lands in here as a curio, and I opted not to flex its ray tracing or DLSS modes.

This is a rigid walk through the game's Novigrad section. The 6700XT really struggled to lock a consistent frame rate here for some reason.
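For readers who want to reproduce a "1% low" figure from their own frame time logs, here is a minimal sketch. It assumes one common definition (the FPS equivalent of the 99th-percentile frame time, using the nearest-rank method); other tools average the slowest 1% of frames instead, and the charts in this review may have been produced either way. The function name `fps_summary` and the sample frame times are hypothetical.

```python
def fps_summary(frame_times_ms):
    """Return (average_fps, one_percent_low_fps) for frame times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS: number of frames divided by total elapsed time in seconds.
    average_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

    # Nearest-rank 99th percentile: the frame time that 99% of frames meet or beat.
    # Integer arithmetic computes ceil(0.99 * n) without floating-point rounding.
    ordered = sorted(frame_times_ms)
    rank = (99 * len(ordered) + 99) // 100
    p99_frame_time_ms = ordered[rank - 1]

    return average_fps, 1000.0 / p99_frame_time_ms


# Hypothetical capture: 196 smooth frames at 10 ms plus 4 spikes at 25 ms.
sample = [10.0] * 196 + [25.0] * 4
average, low = fps_summary(sample)
print(f"average: {average:.1f} fps, 1% low: {low:.1f} fps")
# average: 97.1 fps, 1% low: 40.0 fps
```

In this made-up capture, the average barely registers the four spikes, while the 1% low drops to 40 fps. That gap between the two numbers is what signals uneven frame pacing.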

All of this article's benchmarks were conducted on my standard Ars testing rig, which sports an i7-8700K CPU (overclocked to 4.6GHz), 32GB DDR4-3000 RAM, and a mix of a PCI-e 3.0 NVMe drive and standard SSDs.

Generally, tests of gaming software at 4K resolutions do the best job of demonstrating a GPU's full potential for your system, even if you're not planning to play games at 4K resolutions. Setting a game's resolution to 4K does a better job of taking a CPU's impact on a game out of the equation for these measurements, at which point you should check percentage differences between my tests and guess accordingly. The same goes for the overkill I slap onto each benchmark's settings, which I usually set to either the highest possible or next-highest setting. In your actual gameplay, it's generally better to turn off certain expensive effects, which will result in higher frame rates than seen here (often with negligible hits to how your favorite games look in action).

Still, AMD has recently insisted that its GPUs are tuned to crank at 1440p resolution, so I've taken the company's reps at their word and included 1440p tests for every benchmark above (albeit with overkill graphics settings). Sure enough, that sales pitch checks out to some extent, especially when comparing the 6700XT directly to the 3060 Ti.

No answer to super sampling?

As far as a tale-of-the-tape goes, the RX 6700XT leads the RTX 3060 Ti in categories like core clock speed (2,581 MHz vs. 1,750 MHz), memory data rate (16Gbps vs. 14Gbps), VRAM (12GB vs. 8GB, both GDDR6), and having an additional, whopping load of 96MB L3 cache where Nvidia's card has none. But Nvidia's option has nearly double the shading units (which they call CUDA cores), along with a 256-bit memory bus compared to the 6700XT's 192-bit bus. Each card has its own dedicated allocation of ray tracing cores, though Nvidia's versions are already in their "second-generation" form, while AMD doesn't have an answer for Nvidia's block of DLSS-fueling tensor cores.
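The 16Gbps and 14Gbps figures are per-pin data rates, so they only tell half the memory story; multiplying by bus width gives peak theoretical bandwidth. The sketch below does that arithmetic with the numbers quoted in this review. The function name is hypothetical, and the result ignores the 6700XT's 96MB on-die cache, which is meant to offset its narrower bus.

```python
def peak_bandwidth_gb_s(data_rate_gbps, bus_width_bits):
    """Peak theoretical memory bandwidth in gigabytes per second."""
    # Per-pin rate (gigabits/s) times the number of bus pins gives total gigabits/s;
    # dividing by 8 converts bits to bytes.
    return data_rate_gbps * bus_width_bits / 8


# (data rate in Gbps per pin, bus width in bits), as quoted in the spec comparison.
cards = {
    "RX 6700XT": (16, 192),
    "RTX 3060 Ti": (14, 256),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_s(rate, width):.0f} GB/s")
# RX 6700XT: 384 GB/s
# RTX 3060 Ti: 448 GB/s
```

So the faster memory chips on AMD's card don't translate to higher raw bandwidth; the wider bus on Nvidia's card more than makes up the difference on paper.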

Depending on the testing scenario, that's no small drawback for AMD's side of the equation. DLSS has absolutely matured since its uneven splash with the RTX 2000 series of GPUs, and it does a nimble job of upscaling 1080p-ish resolutions to 1440p, or 1440p-ish resolutions to 4K. At worst, DLSS-upscaled images can look smudgy or ghosty on rare occasions, but rarely worse than your average temporal anti-aliasing (TAA) implementation. And unlike TAA, DLSS can work wonders in creating truly smoothed diagonal lines and other high-fidelity details. (See more on DLSS' maturation in my RTX 3060 review.)

Why am I going on about DLSS in an AMD card review? Because AMD's 2020 promise of "FidelityFX Super Resolution," an upscaling system meant to directly counter Nvidia's DLSS successes, has yet to materialize. "We have released our alpha build [of Super Resolution] to our partners," AMD said during a recent press conference, and the company pledged to keep this upscaling system "an open platform so integration is easy and fast" for interested game developers. But exactly how does it work? How far along is this alpha version? And can it possibly compete with Nvidia's system, which leans specifically on a block of dedicated processing cores? AMD still isn't saying.

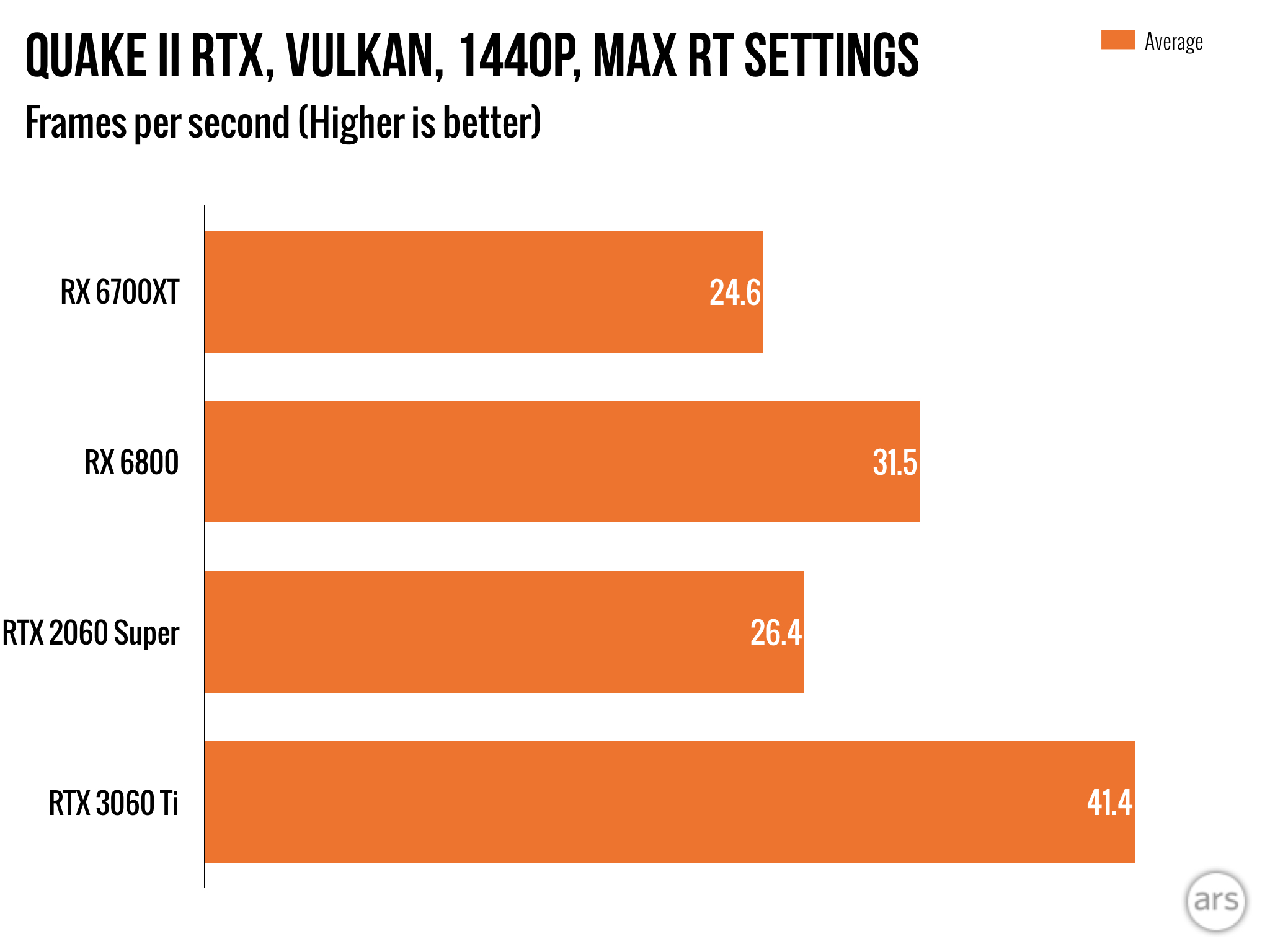

This review's ray tracing-intensive benchmarks.

DLSS doesn't look very good when upscaling from 960p-ish to 1440p, so I disabled DLSS for this one.

Quake II RTX has a convenient spot to stress-test its bonkers ray tracing engine, so I parked my save file in that spot and checked the frame rate on various GPUs. Disable the RT mode, and the frame rate immediately climbs to over 700 fps on each of these GPUs. (Take that, Voodoo 2 GPU!)

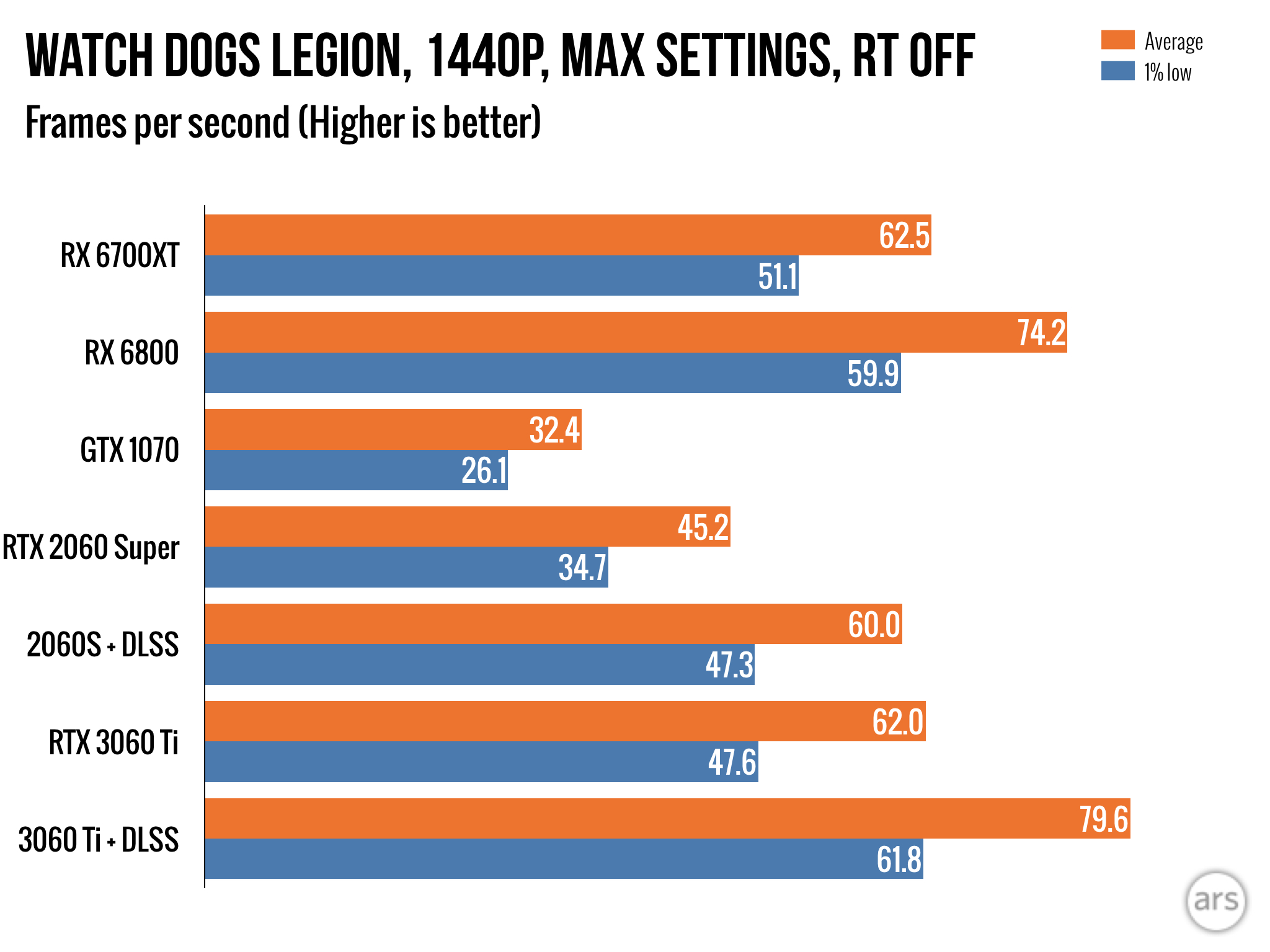

For those who'd rather play Watch Dogs Legion with more frames and fewer rays, here's a non-RT series of benchmarks.

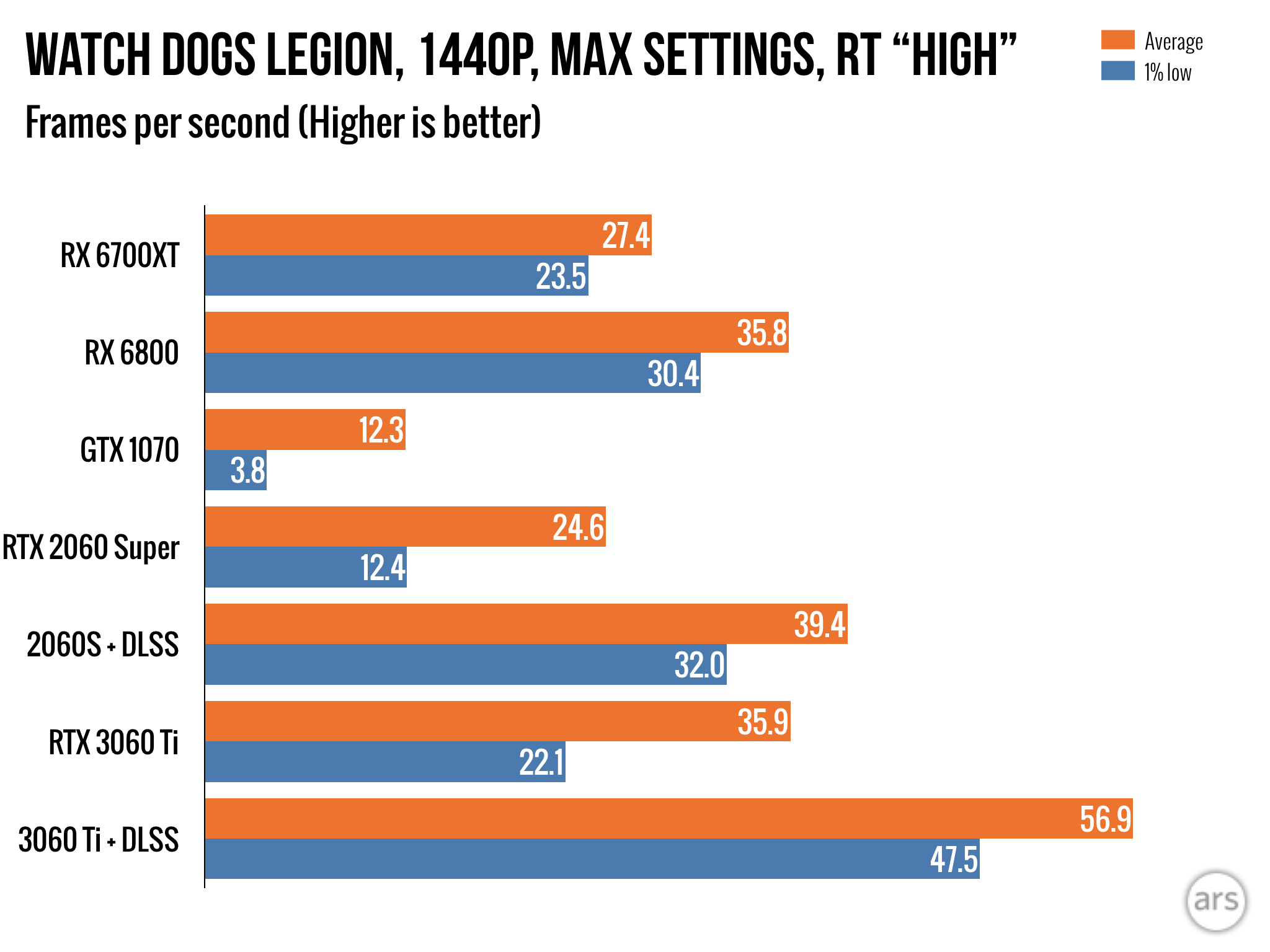

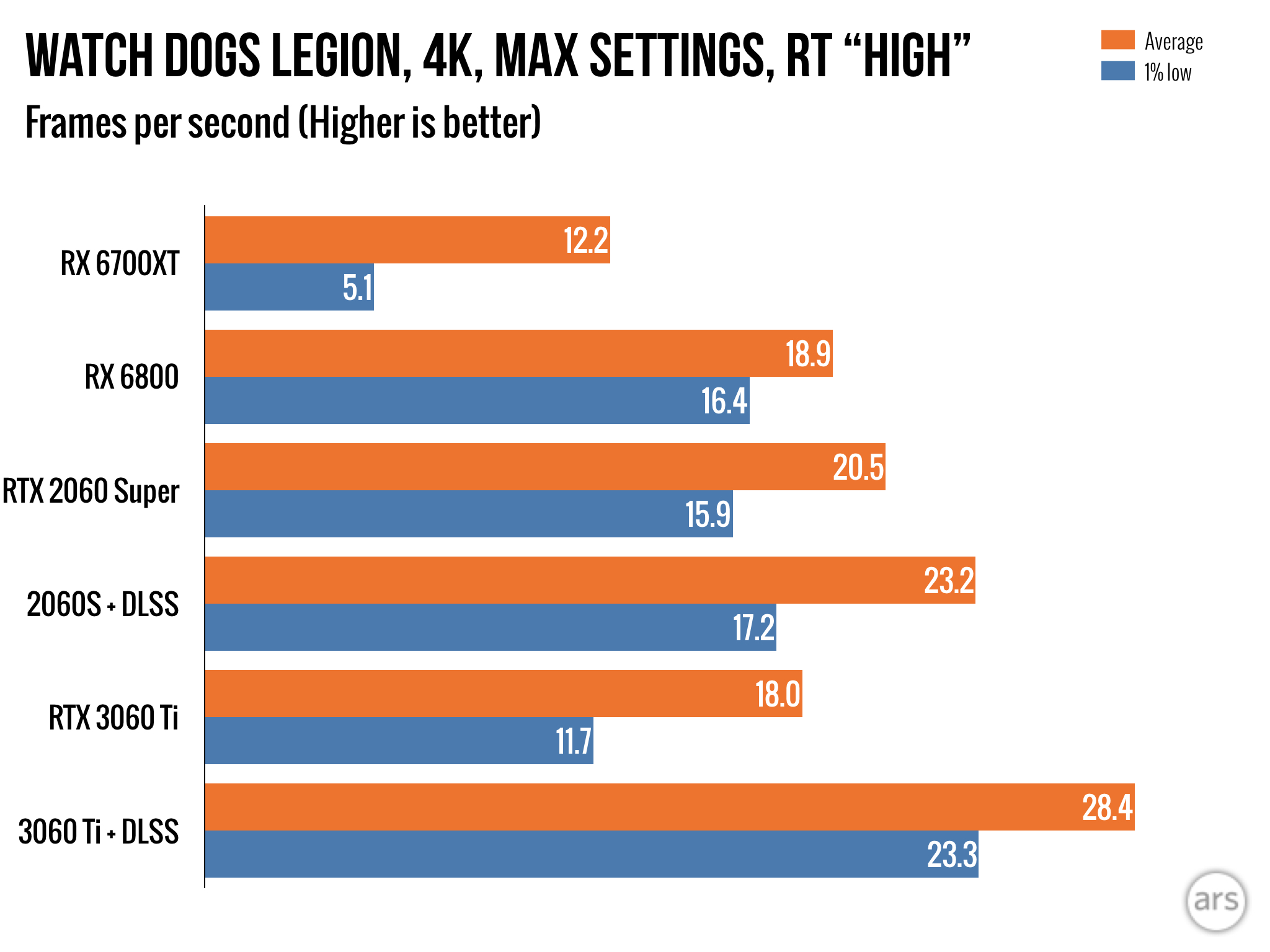

Hence, I've included test results where DLSS has a negligible negative impact on image quality—and in many ways, a positive one. (My Minecraft ray tracing tests, on the other hand, do not include its 960p-to-1440p DLSS option, since that one is too blurry to recommend.) Both AMD and Nvidia are officially on the ray tracing train with their latest GPU generations, as fueled by native implementations within Vulkan and DirectX 12, and you can see the RX 6700XT can muster workable ray tracing performance. Whittle resolutions and settings down, and you can lock the RT modes of Watch Dogs Legion, Control, Minecraft, and Quake II RTX to a 30 fps refresh.

That's not a great RT frame rate for anyone spending upwards of $450 on a GPU, but it's at least a foot in the ray tracing door for anyone who favors AMD for any reason. Still, Nvidia's option leads across the board in raw ray tracing performance, when all pixels are equal, and that lead climbs once DLSS enters the equation.

Of course, your GPU interests may have nothing to do with ray tracing, and the benchmarks higher up in the article are a matter of sheer rasterization. In general, you're looking at a coin flip in terms of whether the RX 6700XT will take the lead in a game or not (or whether it's a dead heat). At 1440p resolution, Borderlands 3 and Assassin's Creed Valhalla deliver the clearest AMD wins in this specific showdown, though in the case of Borderlands 3, that lead comes at the cost of much more variable frame rates—as seen in this review's inclusion of a "1% low" frame rate count. For the most part, the RX 6700XT is comparable to Nvidia's cards in frame rate variance, but when the card takes a massive lead, it usually doesn't do so in stable fashion.

AMD's proprietary toggles: One's smart, one's so-so

All of those charts, by the way, can move further in AMD's favor if you're in a position to enable AMD Smart Access Memory. This requires owning an RX 6000-series GPU, an AMD Ryzen 5000 series CPU (or "select" CPUs in the Ryzen 3000 line), and an AMD 500 series motherboard. Within such an environment, AMD says it can guarantee a higher-bandwidth pipeline between your CPU and the entirety of your GPU's VRAM.

And considering how well AMD's Ryzen line has done in recent years, that's a likely scenario for anyone running a high-end gaming PC. However, I don't have an AMD CPU or compatible motherboard to test these claims, so I'm left staring longingly at a chart provided by AMD that promises 3-16 percent raw frame rate gains in an admittedly small list of 10 games—though these run the gamut between DX11, DX12, and Vulkan. (The gains average out to roughly 8 percent per game.) I hope to go deeper on this feature in the near future and, in particular, to determine whether these frame rate gains also come with more frame time stability.

Nvidia has pitched a similar-sounding concept with RTX IO, though this works by streamlining the pipeline connecting your software's install files to your VRAM. However, we're still waiting to see this take shape in available testing scenarios. For now, points in AMD's column for this one.

I'm not necessarily as bullish about AMD Radeon Boost, a Radeon-exclusive feature that claims to dynamically drop a game's resolution when it detects high-speed motion. It's a variant of the DirectX 12 Ultimate "variable rate shading" (VRS) feature, and it tries to detect first-person action and mouse-camera control before dropping the screen's resolution wherever you aren't necessarily looking. In other words: the center of the image will remain high-resolution, as will your on-screen menus, but peripheral pixels will drop in resolution.

Because AMD's current implementation looks specifically for first-person action, it doesn't seem to trigger in third-person action games. Those are the ones I tend to crave more frames in, as opposed to first-person shooters like Counter-Strike, Apex Legends, Valorant, and Doom Eternal, which are already primed for insane frame rates by default. A few cursory tests did seem to indicate that first-person games gained upwards of 20 fps when I toggled the feature, and they did so in ways that were nigh-imperceptible. But those 20 fps came in titles that were already running upwards of 165 fps, as opposed to, say, a ray tracing heavyweight. In terms of frame rate percentage, that's not a ton. I'll take any frames I can get, but I'd like to see further maturation of the VRS ecosystem.

Admittedly, a hypothetical recommendation here

As a cut-down RX 6800, the RX 6700XT does a good job of coming down $100 in MSRP without feeling like we're losing $100 of performance, and AMD's engineers made some clever calls with its specs juggle on a smaller piece of silicon. (They call it "Little Navi" for a reason; the die size has shrunk to 335mm².) At full default load, its "reference" model has run noticeably cool and quiet (albeit at a 230W power draw, so not necessarily the most efficient GPU out there). While not as whisper-quiet as Nvidia's latest engineering triumphs, AMD's reference version of the 6700XT never exceeded the acoustic envelope of my CPU's cooling system.

If you have zero interest in 4K resolutions, the 6700XT is a much easier AMD recommendation than the $550 RX 6800. On this cheaper GPU, cutting-edge PC games dance at the 60 fps line at 1440p, while anyone who wants to crank twitchy, low-fidelity shooters on 240 fps monitors, in either 1080p or 1440p, will be in good shape with this option.

While there are certainly some cases of percentage gains and losses in comparison, I can largely say the same for Nvidia's RTX 3060 Ti, which enjoys some significant raw-power leads and benefits from Nvidia's proprietary cores. Then again, uh, have you found any of those for sale at a reasonable price as of late? No? Me neither. An RX 6700XT at a fair retail price isn't a bad call if your shopping options boil down to either buying that GPU or sitting on a half-filled pillow of 3D rendering hopes and dreams.

In the meantime, I wish you luck finding your ideal GPU in safe and reasonably priced fashion. Me, I'll be over here trying to find a copy of the Wu-Tang Clan album that Martin Shkreli bought in 2016. Let's call it a race and see who gets theirs first.

Listing image by Sam Machkovech

March 17, 2021 at 08:00PM

AMD Radeon RX 6700 review: If another sold-out GPU falls in the forest… - Ars Technica
